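How do you use a StorageClass to provision PVs dynamically? This article walks through a working StorageClass setup, with the aim of giving anyone facing this problem a simple, practical approach.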

Before deploying nfs-client-provisioner, prepare an NFS storage server and install the NFS client on every node.

NFS server: 192.168.248.139

Shared directory: /data/nfs
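The server-side export is not shown in the article. As a rough sketch, the helper below builds an `/etc/exports` entry for the shared directory. The function name `nfs_export_line`, the client subnet `192.168.248.0/24`, and the export options are assumptions for illustration, not values taken from the original setup.

```shell
# Sketch: build an /etc/exports entry for the shared directory.
# The options (rw,sync,no_root_squash) and the client subnet are assumptions.
nfs_export_line() {
    dir="$1"      # directory to share, e.g. /data/nfs
    clients="$2"  # clients allowed to mount it (assumed subnet below)
    printf '%s %s(rw,sync,no_root_squash)\n' "$dir" "$clients"
}

nfs_export_line /data/nfs 192.168.248.0/24
# On the NFS server (as root) the line would go into /etc/exports,
# followed by a reload with: exportfs -r
```

Restricting the export to the node subnet rather than `*` is a design choice; adjust it to match the network the nodes actually use.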

Deployment manifest for nfs-client-provisioner

vim nfs-client-provisioner.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nfs-client-provisioner
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: quay.azk8s.cn/external_storage/nfs-client-provisioner:latest
              volumeMounts:
                - name: timezone
                  mountPath: /etc/localtime
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: fuseim.pri/ifs
                - name: NFS_SERVER
                  value: 192.168.248.139
                - name: NFS_PATH
                  value: /data/nfs
          volumes:
            - name: timezone
              hostPath:
                path: /usr/share/zoneinfo/Asia/Shanghai
            - name: nfs-client-root
              nfs:
                server: 192.168.248.139
                path: /data/nfs

StorageClass manifest

vim nfs-client-class.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: managed-nfs-storage
      annotations:
        storageclass.kubernetes.io/is-default-class: "true"  # make this the default StorageClass
    provisioner: fuseim.pri/ifs  # or choose another name; must match the Deployment's PROVISIONER_NAME env
    parameters:
      archiveOnDelete: "false"  # when a PVC is deleted, its data on the backing storage is deleted too

RBAC manifest

vim nfs-client-rbac.yaml

    kind: ServiceAccount
    apiVersion: v1
    metadata:
      name: nfs-client-provisioner
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

With these three files in place, run kubectl apply to deploy nfs-client-provisioner.
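Since `kubectl apply` returns before the pod is ready, it can help to wait for the rollout explicitly. The sketch below wraps `kubectl rollout status` in a small helper; the function name `wait_for_provisioner`, the `KUBECTL` override variable, and the 120-second timeout are illustrative assumptions.

```shell
# KUBECTL may be overridden (for example with "echo") for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

wait_for_provisioner() {
    # Block until the Deployment reports its replicas ready, or time out.
    # Deployment name and namespace come from the manifest above;
    # the 120s timeout is an arbitrary choice.
    "$KUBECTL" rollout status deployment/nfs-client-provisioner \
        -n default --timeout=120s
}
```

After `kubectl apply -f .`, calling `wait_for_provisioner` exits non-zero if the Deployment has not become ready within the timeout, which makes it convenient to chain in a script.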

    [root@k8s-master-01 Dynamic-pv]# kubectl apply -f .
    storageclass.storage.k8s.io/managed-nfs-storage created
    deployment.apps/nfs-client-provisioner created
    serviceaccount/nfs-client-provisioner created
    clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
    clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
    role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

Check the pod status and the StorageClass

    [root@k8s-master-01 Dynamic-pv]# kubectl get pod,sc
    NAME                                        READY   STATUS    RESTARTS   AGE
    pod/nfs-client-provisioner-c676947d-pfpms   1/1     Running   0          107s

    NAME                                                        PROVISIONER      AGE
    storageclass.storage.k8s.io/managed-nfs-storage (default)   fuseim.pri/ifs   108s

nfs-client-provisioner is running and the StorageClass has been created. Next, create a few PVCs as a test.

vim mysql-pvc.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mysql-01-pvc
      # annotations:
      #   volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
    spec:
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 10Gi
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mysql-02-pvc
    spec:
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 5Gi
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mysql-03-pvc
    spec:
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 3Gi

    [root@k8s-master-01 Dynamic-pv]# kubectl apply -f mysql-pvc.yaml
    persistentvolumeclaim/mysql-01-pvc created
    persistentvolumeclaim/mysql-02-pvc created
    persistentvolumeclaim/mysql-03-pvc created
    [root@k8s-master-01 Dynamic-pv]# kubectl get pvc,pv
    NAME                                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
    persistentvolumeclaim/mysql-01-pvc   Bound    pvc-eef853e1-f8d8-4ab9-bfd3-05c2a58fd9dc   10Gi       RWX            managed-nfs-storage   2m54s
    persistentvolumeclaim/mysql-02-pvc   Bound    pvc-fc0b8228-81c0-4d91-83b0-6bb20ab37cc3   5Gi        RWX            managed-nfs-storage   2m54s
    persistentvolumeclaim/mysql-03-pvc   Bound    pvc-c6739d7d-4930-49bd-975f-04bffc05dfd6   3Gi        RWX            managed-nfs-storage   2m54s

    NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                  STORAGECLASS          REASON   AGE
    persistentvolume/pvc-c6739d7d-4930-49bd-975f-04bffc05dfd6   3Gi        RWX            Delete           Bound    default/mysql-03-pvc   managed-nfs-storage            2m54s
    persistentvolume/pvc-eef853e1-f8d8-4ab9-bfd3-05c2a58fd9dc   10Gi       RWX            Delete           Bound    default/mysql-01-pvc   managed-nfs-storage            2m54s
    persistentvolume/pvc-fc0b8228-81c0-4d91-83b0-6bb20ab37cc3   5Gi        RWX            Delete           Bound    default/mysql-02-pvc   managed-nfs-storage            2m54s

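The PVCs were created successfully, and each one automatically got an associated PV. Now check whether directories following the expected naming format were created in the backing storage directory.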
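The PVCs in this walkthrough name no class and rely on `managed-nfs-storage` being the default. When a cluster has several classes, a claim can select one explicitly through `spec.storageClassName`, the current replacement for the legacy `volume.beta.kubernetes.io/storage-class` annotation. A minimal sketch follows; the helper `render_pvc` and the claim name `mysql-04-pvc` are hypothetical.

```shell
# Sketch: print a PVC manifest that selects a StorageClass explicitly.
# render_pvc and the claim name used below are illustrative only.
render_pvc() {
    name="$1"   # PVC name
    size="$2"   # requested capacity, e.g. 1Gi
    class="$3"  # StorageClass to provision from
    cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: ${class}
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: ${size}
EOF
}

render_pvc mysql-04-pvc 1Gi managed-nfs-storage
# To create the claim, pipe the output to: kubectl apply -f -
```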

    [root@localhost nfs]# pwd
    /data/nfs
    [root@localhost nfs]# ll
    total 12
    drwxrwxrwx 2 root root 4096 Feb 20 10:05 default-mysql-01-pvc-pvc-eef853e1-f8d8-4ab9-bfd3-05c2a58fd9dc
    drwxrwxrwx 2 root root 4096 Feb 20 10:05 default-mysql-02-pvc-pvc-fc0b8228-81c0-4d91-83b0-6bb20ab37cc3
    drwxrwxrwx 2 root root 4096 Feb 20 10:05 default-mysql-03-pvc-pvc-c6739d7d-4930-49bd-975f-04bffc05dfd6

The directory contains three folders with long names. Each name follows the provisioner's naming rule, ${namespace}-${pvcName}-${pvName}, so the result matches expectations.
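The naming rule can be reproduced with a one-line helper, which is handy when locating a claim's data on the NFS server. The function name `pv_dir_name` is an assumption; the arguments are taken from the `kubectl get pvc,pv` output above.

```shell
# Sketch: compose the backing directory name from its three parts,
# following the ${namespace}-${pvcName}-${pvName} rule.
pv_dir_name() {
    namespace="$1"
    pvc_name="$2"
    pv_name="$3"
    printf '%s-%s-%s\n' "$namespace" "$pvc_name" "$pv_name"
}

pv_dir_name default mysql-01-pvc pvc-eef853e1-f8d8-4ab9-bfd3-05c2a58fd9dc
# prints: default-mysql-01-pvc-pvc-eef853e1-f8d8-4ab9-bfd3-05c2a58fd9dc
```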

Next, deploy a MySQL application to test a PVC provisioned through the StorageClass.

    cat mysql-config.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: mysql-config
    data:
      custom.cnf: |
        [mysqld]
        default_storage_engine=innodb
        skip_external_locking
        skip_host_cache
        skip_name_resolve
        default_authentication_plugin=mysql_native_password

    cat mysql-secret.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: mysql-user-pwd
    data:
      mysql-root-pwd: cGFzc3dvcmQ=
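Values under a Secret's `data` field are base64-encoded, not encrypted. The sketch below shows how such a value can be produced and checked. The helper names are assumptions, and `base64 -d` assumes a GNU coreutils style `base64`.

```shell
# Sketch: encode and decode a Secret value.
# printf '%s' avoids encoding a trailing newline into the value.
encode_secret() {
    printf '%s' "$1" | base64
}

decode_secret() {
    printf '%s' "$1" | base64 -d
}

encode_secret password        # prints: cGFzc3dvcmQ=
decode_secret cGFzc3dvcmQ=    # prints: password
```

Because the value in `mysql-secret.yaml` decodes to `password`, a real deployment should replace it with a stronger root password before applying the manifest.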

    cat mysql-deploy.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql
    spec:
      type: NodePort
      ports:
        - port: 3306
          nodePort: 30006
          protocol: TCP
          targetPort: 3306
      selector:
        app: mysql
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mysql
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mysql
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: mysql
        spec:
          containers:
            - image: mysql
              name: mysql
              imagePullPolicy: IfNotPresent
              env:
                - name: MYSQL_ROOT_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: mysql-user-pwd
                      key: mysql-root-pwd
              ports:
                - containerPort: 3306
                  name: mysql
              volumeMounts:
                - name: mysql-config
                  mountPath: /etc/mysql/conf.d/
                - name: mysql-persistent-storage
                  mountPath: /var/lib/mysql
                - name: timezone
                  mountPath: /etc/localtime
          volumes:
            - name: mysql-config
              configMap:
                name: mysql-config
            - name: timezone
              hostPath:
                path: /usr/share/zoneinfo/Asia/Shanghai
            - name: mysql-persistent-storage
              persistentVolumeClaim:
                claimName: mysql-01-pvc

    [root@k8s-master-01 yaml]# kubectl apply -f .
    configmap/mysql-config created
    service/mysql created
    deployment.apps/mysql created
    secret/mysql-user-pwd created
    [root@k8s-master-01 yaml]# kubectl get pod,svc
    NAME                                        READY   STATUS    RESTARTS   AGE
    pod/mysql-7c5b5df54c-vrnr8                  1/1     Running   0          83s
    pod/nfs-client-provisioner-c676947d-pfpms   1/1     Running   0          30m

    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)          AGE
    service/kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP          93d
    service/mysql        NodePort    10.0.0.19    <none>        3306:30006/TCP   83s

The MySQL application is running. To test it, connect to the database through the IP of any node on port 30006.

    [root@localhost ~]# mysql -uroot -h192.168.248.134 -P30006 -p
    Enter password:
    Welcome to the MariaDB monitor.  Commands end with ; or \g.
    Your MySQL connection id is 10
    Server version: 8.0.19 MySQL Community Server - GPL

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MySQL [(none)]> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | mysql              |
    | performance_schema |
    | sys                |
    +--------------------+
    4 rows in set (0.01 sec)

    MySQL [(none)]>

The database connection works. On the NFS server, the MySQL data has been persisted to the directory /data/nfs/default-mysql-01-pvc-pvc-eef853e1-f8d8-4ab9-bfd3-05c2a58fd9dc.

    [root@localhost nfs]# du -sh *
    177M    default-mysql-01-pvc-pvc-eef853e1-f8d8-4ab9-bfd3-05c2a58fd9dc
    4.0K    default-mysql-02-pvc-pvc-fc0b8228-81c0-4d91-83b0-6bb20ab37cc3
    4.0K    default-mysql-03-pvc-pvc-c6739d7d-4930-49bd-975f-04bffc05dfd6
    [root@localhost nfs]# cd default-mysql-01-pvc-pvc-eef853e1-f8d8-4ab9-bfd3-05c2a58fd9dc/
    [root@localhost default-mysql-01-pvc-pvc-eef853e1-f8d8-4ab9-bfd3-05c2a58fd9dc]# ls
    auto.cnf       binlog.index  client-cert.pem  ibdata1      ibtmp1        mysql.ibd           public_key.pem   sys
    binlog.000001  ca-key.pem    client-key.pem   ib_logfile0  #innodb_temp  performance_schema  server-cert.pem  undo_001
    binlog.000002  ca.pem        ib_buffer_pool   ib_logfile1  mysql         private_key.pem     server-key.pem   undo_002

Note that the PVC above was created by hand. In practice, StorageClass is used more often with StatefulSet workloads, which can consume a StorageClass directly through the volumeClaimTemplates field, as follows:

vim web.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      ports:
        - port: 80
          name: web
      clusterIP: None
      selector:
        app: nginx
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: web
    spec:
      serviceName: "nginx"
      replicas: 8
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 80
                  name: web
              volumeMounts:
                - name: www
                  mountPath: /usr/share/nginx/html
      volumeClaimTemplates:
        - metadata:
            name: www
          spec:
            accessModes: [ "ReadWriteOnce" ]
            resources:
              requests:
                storage: 10Gi

Create the objects above directly:

    [root@k8s-master-01 Dynamic-pv]# kubectl apply -f web.yaml
    service/nginx created
    statefulset.apps/web created
    [root@k8s-master-01 Dynamic-pv]# kubectl get pod -o wide
    NAME                                    READY   STATUS    RESTARTS   AGE   IP             NODE          NOMINATED NODE   READINESS GATES
    nfs-client-provisioner-c676947d-wzwhh   1/1     Running   0          41m   10.244.0.176   k8s-node-01   <none>           <none>
    web-0                                   1/1     Running   0          32s   10.244.1.167   k8s-node-02   <none>           <none>
    web-1                                   1/1     Running   0          31s   10.244.0.188   k8s-node-01   <none>           <none>
    web-2                                   1/1     Running   0          29s   10.244.1.168   k8s-node-02   <none>           <none>
    web-3                                   1/1     Running   0          27s   10.244.0.189   k8s-node-01   <none>           <none>
    web-4                                   1/1     Running   0          24s   10.244.1.169   k8s-node-02   <none>           <none>
    web-5                                   1/1     Running   0          22s   10.244.0.190   k8s-node-01   <none>           <none>
    web-6                                   1/1     Running   0          21s   10.244.1.170   k8s-node-02   <none>           <none>
    web-7                                   1/1     Running   0          19s   10.244.0.191   k8s-node-01   <none>           <none>

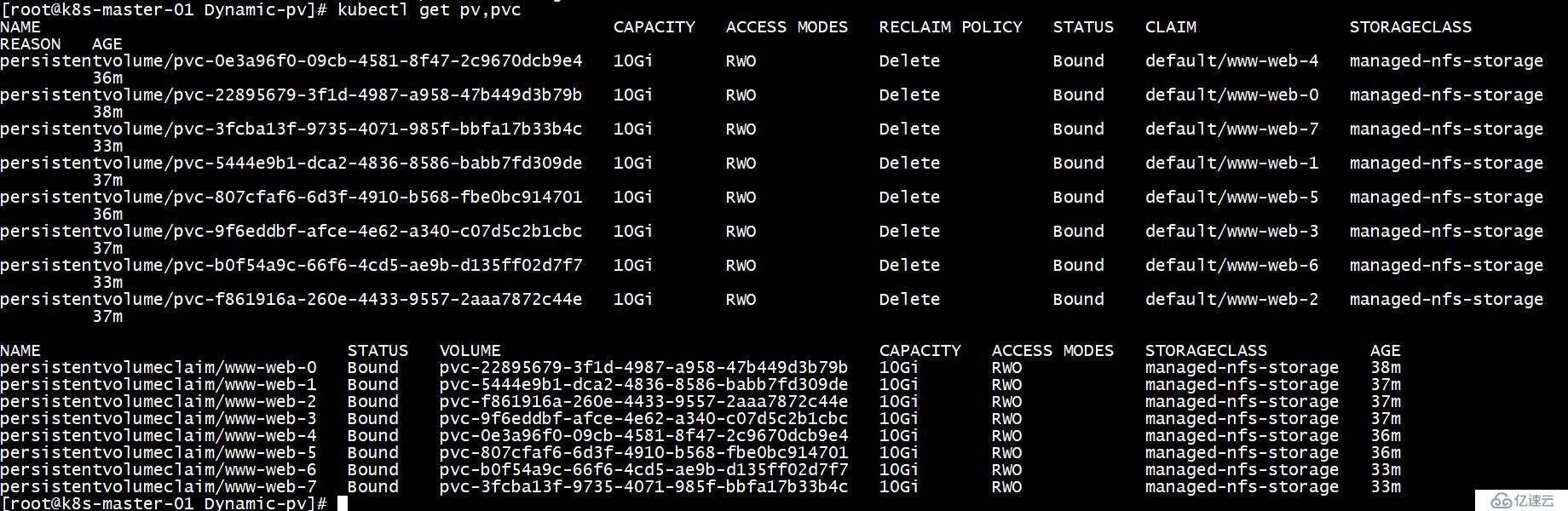

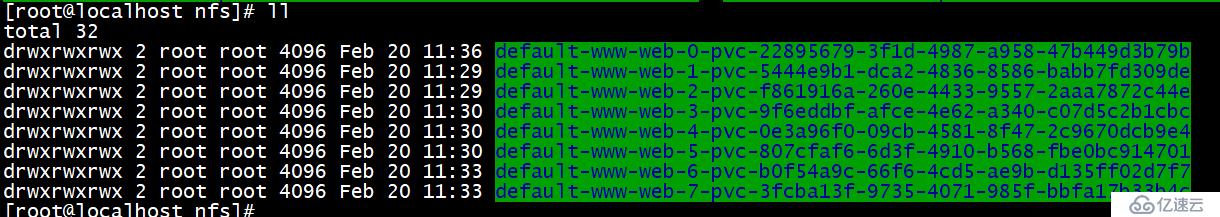

Check the data directories on the storage backend.

As shown, NFS volumes are provisioned dynamically and automatically. That completes this StorageClass walkthrough for persistent storage on k8s.
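For a StatefulSet, Kubernetes names each generated claim `<template name>-<StatefulSet name>-<ordinal>`, so the manifest above should yield `www-web-0` through `www-web-7`. The sketch below lists those names for comparison with `kubectl get pvc`; the helper name `statefulset_pvc_names` is an assumption.

```shell
# Sketch: list the PVC names a StatefulSet's volumeClaimTemplates produce.
statefulset_pvc_names() {
    template="$1"  # volumeClaimTemplates[].metadata.name
    sts="$2"       # StatefulSet name
    replicas="$3"  # replica count
    i=0
    while [ "$i" -lt "$replicas" ]; do
        printf '%s-%s-%s\n' "$template" "$sts" "$i"
        i=$((i + 1))
    done
}

statefulset_pvc_names www web 8
```

Combined with the ${namespace}-${pvcName}-${pvName} rule, this suggests the directories on the NFS share would start with `default-www-web-0-` and so on, one per replica.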

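Those are the concrete steps for provisioning PVs dynamically with a StorageClass. Several of the tools covered here are likely to come up in day-to-day work, and hopefully this walkthrough proves useful.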
