This article explains how to build a distributed two-level cache component with Redis and Caffeine, using a working implementation to walk through the approach.

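Caching means reading data from a slower medium and keeping a copy on a faster one, for example disk --> memory.

Data normally lives on disk, for example in a database. Reading from the database on every request is limited by disk I/O, which is why in-memory caches such as Redis exist. Once data has been loaded into memory, later requests can be served straight from memory, which speeds up reads considerably.

Redis, however, is usually deployed separately as a cluster, so every access pays for network I/O. Connection pooling reduces the cost of connecting to the cluster, but transferring the data still takes time. That is the motivation for in-process caches such as Caffeine: when the in-process cache holds matching data, the application uses it directly instead of going over the network to Redis. Together they form a two-level cache, in which the in-process cache is the first level and the remote cache (such as Redis) is the second level.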
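The lookup order of a two-level cache can be sketched without any external dependency. The following is a minimal illustration, not the component's code: both levels are plain in-memory maps (the `remote` map merely stands in for Redis), and the class name `TwoLevelLookupSketch`, its fields, and the `database` function are all hypothetical. It is single-threaded on purpose.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical sketch: plain maps stand in for Caffeine (level 1) and Redis (level 2).
class TwoLevelLookupSketch {
    final Map<String, String> local = new ConcurrentHashMap<>();   // level 1: in-process
    final Map<String, String> remote = new ConcurrentHashMap<>();  // level 2: stand-in for Redis
    int databaseReads = 0;                                         // counts fall-throughs to the source

    String get(String key, Function<String, String> database) {
        String value = local.get(key);
        if (value != null) {
            return value;                    // level-1 hit: no network access needed
        }
        value = remote.get(key);
        if (value == null) {
            databaseReads++;                 // both levels missed: read the source of truth
            value = database.apply(key);
            if (value == null) {
                return null;                 // nothing to cache
            }
            remote.put(key, value);
        }
        local.put(key, value);               // fill level 1 for later reads
        return value;
    }

    public static void main(String[] args) {
        TwoLevelLookupSketch cache = new TwoLevelLookupSketch();
        Function<String, String> database = key -> "row-for-" + key;
        System.out.println(cache.get("user:1", database));
        System.out.println(cache.get("user:1", database));
        System.out.println("database reads: " + cache.databaseReads);
    }
}
```

The second call is answered from the local map, so the database function runs only once. A real deployment would also need expiry and cross-node invalidation, which this sketch leaves out.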

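Does the system need a cache?

CPU usage: if some of your applications spend a lot of CPU computing a result, caching that result avoids repeating the computation.

Database I/O usage: if your database connection pool is mostly idle, you should not add a cache. If the pool is busy, or you regularly get alerts about running out of connections, it is time to consider caching.

Redis stores hot data; anything not in Redis is read directly from the database.

With Redis already in place, why bother with in-process caches such as Guava and Caffeine?

If Redis becomes unavailable, every request falls through to the database, which can easily cause a cache avalanche, although this rarely happens in practice.

Accessing Redis costs network I/O plus serialization and deserialization. Redis is fast, but it is still slower than a local method call, so the hottest data can be kept locally to speed up access further. The idea is not unique to internet architecture: computer systems use L1, L2, and L3 caches to reduce direct access to main memory and speed things up.

Redis alone therefore covers most needs, but when you want higher performance and higher availability, multi-level caching becomes necessary.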

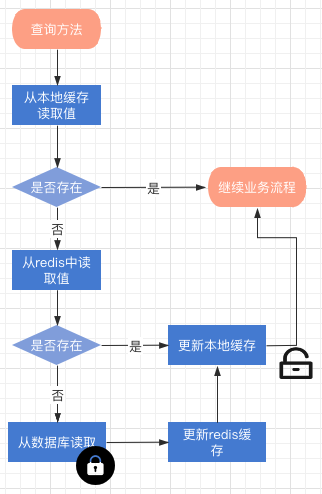

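How the two-level cache operates

Read flow

When neither Redis nor the local cache holds the value, an update is triggered, and the whole update runs under a lock.

Cache invalidation flow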

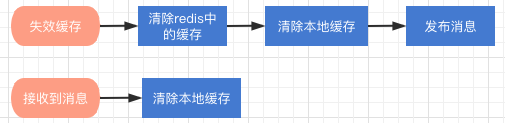

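Invalidation is triggered both when a key is updated in Redis and when it is deleted. After the Redis entry has been cleared, the other nodes are notified to clear their local copies.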
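The invalidation flow can be illustrated with an in-process stand-in for Redis pub/sub. This is only a sketch under stated assumptions: `Topic`, `Node`, and `demo` are hypothetical names, the shared `remote` map plays the role of Redis, and messages are delivered synchronously, which a real Redis channel does not guarantee.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch: two application nodes share one remote store and one topic.
class InvalidationBroadcastSketch {

    // Stand-in for a Redis pub/sub channel; delivery here is synchronous.
    static class Topic {
        private final List<Node> subscribers = new ArrayList<>();
        void subscribe(Node node) { subscribers.add(node); }
        void publish(String key) {
            for (Node node : subscribers) {
                node.onMessage(key);
            }
        }
    }

    static class Node {
        final Map<String, String> local = new ConcurrentHashMap<>();
        private final Map<String, String> remote;
        private final Topic topic;

        Node(Map<String, String> remote, Topic topic) {
            this.remote = remote;
            this.topic = topic;
            topic.subscribe(this);
        }

        String get(String key) {
            String value = local.get(key);
            if (value == null) {
                value = remote.get(key);
                if (value != null) {
                    local.put(key, value);
                }
            }
            return value;
        }

        // Write the remote store first, then tell every node to drop its local copy.
        void put(String key, String value) {
            remote.put(key, value);
            topic.publish(key);
        }

        void evict(String key) {
            remote.remove(key);
            topic.publish(key);
        }

        void onMessage(String key) {
            local.remove(key);
        }
    }

    static String demo() {
        Map<String, String> remote = new ConcurrentHashMap<>();
        Topic topic = new Topic();
        Node a = new Node(remote, topic);
        Node b = new Node(remote, topic);

        a.put("k", "v1");
        String first = b.get("k");   // b now holds a local copy
        a.put("k", "v2");            // the broadcast removes b's stale copy
        String second = b.get("k");
        a.evict("k");
        String third = b.get("k");
        return first + "," + second + "," + third;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints v1,v2,null
    }
}
```

Without the broadcast, node `b` would keep returning `v1` from its local map until that entry expired.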

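The component is built on top of the Spring Cache framework. To use the distributed two-level cache in a project, you only need to add cacheManager = "L2_CacheManager" to the cache annotation, or cacheManager = CacheRedisCaffeineAutoConfiguration.分布式二級緩存

// This method is served by the distributed two-level cache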

@Cacheable(cacheNames = CacheNames.CACHE_12HOUR, cacheManager = "L2_CacheManager")

public Config getAllValidateConfig() {

}

If you want to use both the plain distributed cache and the distributed two-level cache component, you need to register a @Primary CacheManager bean with Spring

@Primary

@Bean("deaultCacheManager")

public RedisCacheManager cacheManager(RedisConnectionFactory factory) {

// Create a default configuration; the config object is used to customize the cache

RedisCacheConfiguration config = RedisCacheConfiguration.defaultCacheConfig();

// Set the default expiration time for cache entries, expressed as a Duration

config = config.entryTtl(Duration.ofMinutes(2)).disableCachingNullValues();

// Build the initial set of cache names

Set<String> cacheNames = new HashSet<>();

cacheNames.add(CacheNames.CACHE_15MINS);

cacheNames.add(CacheNames.CACHE_30MINS);

// Apply a different configuration to each cache name

Map<String, RedisCacheConfiguration> configMap = new HashMap<>();

configMap.put(CacheNames.CACHE_15MINS, config.entryTtl(Duration.ofMinutes(15)));

configMap.put(CacheNames.CACHE_30MINS, config.entryTtl(Duration.ofMinutes(30)));

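// Initialize a cacheManager with the custom cache configurations

RedisCacheManager cacheManager = RedisCacheManager.builder(factory)

.initialCacheNames(cacheNames) // Mind the call order: set the initial cache names with this method first, then apply the per-cache configurations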

.withInitialCacheConfigurations(configMap)

.build();

return cacheManager;

}

Then:

// This method uses the distributed two-level cache

@Cacheable(cacheNames = CacheNames.CACHE_12HOUR, cacheManager = "L2_CacheManager")

public Config getAllValidateConfig() {

}

// This method uses the plain distributed cache

@Cacheable(cacheNames = CacheNames.CACHE_12HOUR)

public Config getAllValidateConfig2() {

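}

The core is to implement the org.springframework.cache.CacheManager interface and extend org.springframework.cache.support.AbstractValueAdaptingCache, so that cache reads and writes happen inside the Spring cache framework.

RedisCaffeineCacheManager implements the CacheManager interface

RedisCaffeineCacheManager.class manages the cache instances: it creates the cache instance for each name in CacheNames and stores it in a map.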
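The "one cache instance per name, kept in a map" idea can be shown in isolation. The sketch below is an assumption-laden simplification, not the component's class: `CacheRegistrySketch` and `NamedCache` are hypothetical, and it uses `ConcurrentHashMap.computeIfAbsent` rather than the `putIfAbsent` call in the listing that follows, so that a second instance is never constructed for the same name.

```java
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of a name-to-cache registry with lazy creation.
class CacheRegistrySketch {

    // Stand-in for a real cache implementation.
    static class NamedCache {
        final String name;
        final Map<Object, Object> store = new ConcurrentHashMap<>();
        NamedCache(String name) { this.name = name; }
    }

    private final Map<String, NamedCache> caches = new ConcurrentHashMap<>();
    private final Set<String> allowedNames;
    private final boolean dynamic;              // true: unknown names are created on demand
    final AtomicInteger created = new AtomicInteger();

    CacheRegistrySketch(Set<String> allowedNames, boolean dynamic) {
        this.allowedNames = allowedNames;
        this.dynamic = dynamic;
    }

    NamedCache getCache(String name) {
        if (!dynamic && !allowedNames.contains(name)) {
            return null;                        // unknown name and dynamic creation disabled
        }
        // computeIfAbsent runs the factory at most once per name, even under contention
        return caches.computeIfAbsent(name, n -> {
            created.incrementAndGet();
            return new NamedCache(n);
        });
    }

    public static void main(String[] args) {
        CacheRegistrySketch registry = new CacheRegistrySketch(Set.of("cache:15m"), false);
        NamedCache first = registry.getCache("cache:15m");
        NamedCache second = registry.getCache("cache:15m");
        System.out.println(first == second);
        System.out.println(registry.getCache("unknown"));
    }
}
```

The `dynamic` flag mirrors the switch of the same name in the listing: when it is off, only predefined names yield a cache.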

package com.axin.idea.rediscaffeinecachestarter.support;

import com.axin.idea.rediscaffeinecachestarter.CacheRedisCaffeineProperties;

import com.github.benmanes.caffeine.cache.Caffeine;

import com.github.benmanes.caffeine.cache.stats.CacheStats;

import lombok.extern.slf4j.Slf4j;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.cache.Cache;

import org.springframework.cache.CacheManager;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.util.CollectionUtils;

import java.util.*;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.ConcurrentMap;

import java.util.concurrent.TimeUnit;

@Slf4j

public class RedisCaffeineCacheManager implements CacheManager {

private final Logger logger = LoggerFactory.getLogger(RedisCaffeineCacheManager.class);

private static ConcurrentMap<String, Cache> cacheMap = new ConcurrentHashMap<String, Cache>();

private CacheRedisCaffeineProperties cacheRedisCaffeineProperties;

private RedisTemplate<Object, Object> stringKeyRedisTemplate;

private boolean dynamic = true;

private Set<String> cacheNames;

{

cacheNames = new HashSet<>();

cacheNames.add(CacheNames.CACHE_15MINS);

cacheNames.add(CacheNames.CACHE_30MINS);

cacheNames.add(CacheNames.CACHE_60MINS);

cacheNames.add(CacheNames.CACHE_180MINS);

cacheNames.add(CacheNames.CACHE_12HOUR);

}

public RedisCaffeineCacheManager(CacheRedisCaffeineProperties cacheRedisCaffeineProperties,

RedisTemplate<Object, Object> stringKeyRedisTemplate) {

super();

this.cacheRedisCaffeineProperties = cacheRedisCaffeineProperties;

this.stringKeyRedisTemplate = stringKeyRedisTemplate;

this.dynamic = cacheRedisCaffeineProperties.isDynamic();

}

//——————————————————————— in-process cache utilities ——————————————————————

/**

* Clear all in-process caches

*/

public void clearAllCache() {

stringKeyRedisTemplate.convertAndSend(cacheRedisCaffeineProperties.getRedis().getTopic(), new CacheMessage(null, null));

}

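/**

* Return statistics for all in-process (first-level) caches

* result: {"cache name": statistics}

* @return statistics keyed by cache name, or null when no cache has been created yet

*/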

public static Map<String, CacheStats> getCacheStats() {

if (CollectionUtils.isEmpty(cacheMap)) {

return null;

}

Map<String, CacheStats> result = new LinkedHashMap<>();

for (Cache cache : cacheMap.values()) {

RedisCaffeineCache caffeineCache = (RedisCaffeineCache) cache;

result.put(caffeineCache.getName(), caffeineCache.getCaffeineCache().stats());

}

return result;

}

//—————————————————————————— core —————————————————————————

@Override

public Cache getCache(String name) {

Cache cache = cacheMap.get(name);

if(cache != null) {

return cache;

}

if(!dynamic && !cacheNames.contains(name)) {

return null;

}

cache = new RedisCaffeineCache(name, stringKeyRedisTemplate, caffeineCache(name), cacheRedisCaffeineProperties);

Cache oldCache = cacheMap.putIfAbsent(name, cache);

logger.debug("create cache instance, the cache name is : {}", name);

return oldCache == null ? cache : oldCache;

}

@Override

public Collection<String> getCacheNames() {

return this.cacheNames;

}

public void clearLocal(String cacheName, Object key) {

// A null cacheName clears all in-process caches

if (cacheName == null) {

log.info("Clearing all local caches");

cacheMap = new ConcurrentHashMap<>();

return;

}

Cache cache = cacheMap.get(cacheName);

if(cache == null) {

return;

}

RedisCaffeineCache redisCaffeineCache = (RedisCaffeineCache) cache;

redisCaffeineCache.clearLocal(key);

}

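/**

* Build the local first-level cache

* @param name the cache name, which selects the configuration to apply

* @return a Caffeine cache configured for that name

*/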

private com.github.benmanes.caffeine.cache.Cache<Object, Object> caffeineCache(String name) {

Caffeine<Object, Object> cacheBuilder = Caffeine.newBuilder();

CacheRedisCaffeineProperties.CacheDefault cacheConfig;

switch (name) {

case CacheNames.CACHE_15MINS:

cacheConfig = cacheRedisCaffeineProperties.getCache15m();

break;

case CacheNames.CACHE_30MINS:

cacheConfig = cacheRedisCaffeineProperties.getCache30m();

break;

case CacheNames.CACHE_60MINS:

cacheConfig = cacheRedisCaffeineProperties.getCache60m();

break;

case CacheNames.CACHE_180MINS:

cacheConfig = cacheRedisCaffeineProperties.getCache180m();

break;

case CacheNames.CACHE_12HOUR:

cacheConfig = cacheRedisCaffeineProperties.getCache12h();

break;

default:

cacheConfig = cacheRedisCaffeineProperties.getCacheDefault();

}

long expireAfterAccess = cacheConfig.getExpireAfterAccess();

long expireAfterWrite = cacheConfig.getExpireAfterWrite();

int initialCapacity = cacheConfig.getInitialCapacity();

long maximumSize = cacheConfig.getMaximumSize();

long refreshAfterWrite = cacheConfig.getRefreshAfterWrite();

log.debug("Initializing local cache:");

if (expireAfterAccess > 0) {

log.debug("Set local cache expire-after-access to {} seconds", expireAfterAccess);

cacheBuilder.expireAfterAccess(expireAfterAccess, TimeUnit.SECONDS);

}

if (expireAfterWrite > 0) {

log.debug("Set local cache expire-after-write to {} seconds", expireAfterWrite);

cacheBuilder.expireAfterWrite(expireAfterWrite, TimeUnit.SECONDS);

}

if (initialCapacity > 0) {

log.debug("Set local cache initial capacity to {}", initialCapacity);

cacheBuilder.initialCapacity(initialCapacity);

}

if (maximumSize > 0) {

log.debug("Set local cache maximum size to {}", maximumSize);

cacheBuilder.maximumSize(maximumSize);

}

if (refreshAfterWrite > 0) {

// Caution: Caffeine supports refreshAfterWrite only on a LoadingCache; with the plain build() below, a positive value here makes build() throw IllegalStateException

cacheBuilder.refreshAfterWrite(refreshAfterWrite, TimeUnit.SECONDS);

}

cacheBuilder.recordStats();

return cacheBuilder.build();

}

}

RedisCaffeineCache extends AbstractValueAdaptingCache

The core is the get and put methods.
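The locking idea behind get (check, lock per key, check again, then load) can be tried out on its own. The following is a minimal sketch, assuming a plain map as the store; `PerKeyLoadSketch` and `loadsUnderContention` are hypothetical names, and unlike the listing it obtains the lock with `computeIfAbsent`.

```java
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch: one lock per key, with a second lookup after the lock is acquired.
class PerKeyLoadSketch {
    private final Map<String, Object> store = new ConcurrentHashMap<>();
    private final Map<String, ReentrantLock> locks = new ConcurrentHashMap<>();

    Object get(String key, Callable<Object> loader) throws Exception {
        Object value = store.get(key);
        if (value != null) {
            return value;                        // fast path, no locking
        }
        ReentrantLock lock = locks.computeIfAbsent(key, k -> new ReentrantLock());
        lock.lock();                             // acquired before try, released in finally
        try {
            value = store.get(key);              // another thread may have loaded it meanwhile
            if (value != null) {
                return value;
            }
            value = loader.call();
            if (value != null) {
                store.put(key, value);
            }
            return value;
        } finally {
            lock.unlock();
        }
    }

    // Starts the given number of threads on the same key and reports how often the loader ran.
    static int loadsUnderContention(int threads) {
        PerKeyLoadSketch cache = new PerKeyLoadSketch();
        AtomicInteger loads = new AtomicInteger();
        CountDownLatch start = new CountDownLatch(1);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                try {
                    start.await();
                    cache.get("k", () -> {
                        loads.incrementAndGet();
                        return "v";
                    });
                } catch (Exception e) {
                    throw new IllegalStateException(e);
                }
            });
            workers[i].start();
        }
        start.countDown();
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return loads.get();
    }

    public static void main(String[] args) {
        System.out.println("loader invocations: " + loadsUnderContention(8));
    }
}
```

Locking per key rather than per cache means a slow load for one key does not block reads of other keys. The lock map in this sketch, like the one in the listing, is never pruned, so it grows with the number of distinct keys.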

package com.axin.idea.rediscaffeinecachestarter.support;

import com.axin.idea.rediscaffeinecachestarter.CacheRedisCaffeineProperties;

import com.github.benmanes.caffeine.cache.Cache;

import lombok.Getter;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.cache.support.AbstractValueAdaptingCache;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.util.StringUtils;

import java.time.Duration;

import java.util.HashMap;

import java.util.Map;

import java.util.Set;

import java.util.concurrent.Callable;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.locks.ReentrantLock;

public class RedisCaffeineCache extends AbstractValueAdaptingCache {

private final Logger logger = LoggerFactory.getLogger(RedisCaffeineCache.class);

private String name;

private RedisTemplate<Object, Object> redisTemplate;

@Getter

private Cache<Object, Object> caffeineCache;

private String cachePrefix;

/**

* Default key expiration: 3600 s

*/

private long defaultExpiration = 3600;

private Map<String, Long> defaultExpires = new HashMap<>();

{

defaultExpires.put(CacheNames.CACHE_15MINS, TimeUnit.MINUTES.toSeconds(15));

defaultExpires.put(CacheNames.CACHE_30MINS, TimeUnit.MINUTES.toSeconds(30));

defaultExpires.put(CacheNames.CACHE_60MINS, TimeUnit.MINUTES.toSeconds(60));

defaultExpires.put(CacheNames.CACHE_180MINS, TimeUnit.MINUTES.toSeconds(180));

defaultExpires.put(CacheNames.CACHE_12HOUR, TimeUnit.HOURS.toSeconds(12));

}

private String topic;

private Map<String, ReentrantLock> keyLockMap = new ConcurrentHashMap<>();

protected RedisCaffeineCache(boolean allowNullValues) {

super(allowNullValues);

}

public RedisCaffeineCache(String name, RedisTemplate<Object, Object> redisTemplate,

Cache<Object, Object> caffeineCache, CacheRedisCaffeineProperties cacheRedisCaffeineProperties) {

super(cacheRedisCaffeineProperties.isCacheNullValues());

this.name = name;

this.redisTemplate = redisTemplate;

this.caffeineCache = caffeineCache;

this.cachePrefix = cacheRedisCaffeineProperties.getCachePrefix();

this.defaultExpiration = cacheRedisCaffeineProperties.getRedis().getDefaultExpiration();

this.topic = cacheRedisCaffeineProperties.getRedis().getTopic();

defaultExpires.putAll(cacheRedisCaffeineProperties.getRedis().getExpires());

}

@Override

public String getName() {

return this.name;

}

@Override

public Object getNativeCache() {

return this;

}

@Override

public <T> T get(Object key, Callable<T> valueLoader) {

Object value = lookup(key);

if (value != null) {

return (T) value;

}

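// The key is in neither Redis nor the local cache; load it under a per-key lock

ReentrantLock lock = keyLockMap.get(key.toString());

if (lock == null) {

logger.debug("create lock for key : {}", key);

keyLockMap.putIfAbsent(key.toString(), new ReentrantLock());

lock = keyLockMap.get(key.toString());

}

// Acquire the lock before entering try, so that finally never unlocks a lock that was not acquired

lock.lock();

try {

// Check again: another thread may have loaded the value while this one waited

value = lookup(key);

if (value != null) {

return (T) value;

}

// Invoke the original method to obtain the value

value = valueLoader.call();

Object storeValue = toStoreValue(value);

put(key, storeValue);

return (T) value;

} catch (Exception e) {

// Pass the exception itself: e.getCause() is often null, which would discard the failure details

throw new ValueRetrievalException(key, valueLoader, e);

} finally {

lock.unlock();

}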

}

@Override

public void put(Object key, Object value) {

if (!super.isAllowNullValues() && value == null) {

this.evict(key);

return;

}

long expire = getExpire();

logger.debug("put:{},expire:{}", getKey(key), expire);

redisTemplate.opsForValue().set(getKey(key), toStoreValue(value), expire, TimeUnit.SECONDS);

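// Notify the other nodes to clear their local caches when the cache changes

push(new CacheMessage(this.name, key));

// Putting into the local cache here would be pointless: this node also receives its own invalidation message for the key

// caffeineCache.put(key, value);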

}

@Override

public ValueWrapper putIfAbsent(Object key, Object value) {

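Object cacheKey = getKey(key);

// Use the atomic setIfAbsent (SET NX) operation

long expire = getExpire();

// setIfAbsent returns a Boolean that can be null (for example inside a pipeline or transaction), so avoid auto-unboxing

boolean setSuccess = Boolean.TRUE.equals(redisTemplate.opsForValue().setIfAbsent(cacheKey, toStoreValue(value), Duration.ofSeconds(expire)));

if (setSuccess) {

// The value was written: notify the other nodes to drop their local copies

push(new CacheMessage(this.name, key));

caffeineCache.put(key, toStoreValue(value));

// Per the Cache.putIfAbsent contract, null signals that no previous mapping existed

return null;

}

// Redis already held a value: cache that one locally instead of the rejected new value

Object hasValue = redisTemplate.opsForValue().get(cacheKey);

if (hasValue != null) {

caffeineCache.put(key, hasValue);

}

return toValueWrapper(hasValue);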

}

@Override

public void evict(Object key) {

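// Clear the data in Redis first and the Caffeine cache second; if Caffeine were cleared first, another request could reload the old value from Redis into Caffeine in the short window before Redis is cleared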

redisTemplate.delete(getKey(key));

push(new CacheMessage(this.name, key));

caffeineCache.invalidate(key);

}

@Override

public void clear() {

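// Clear the data in Redis first and the Caffeine cache second, so that a concurrent request cannot reload old values from Redis into Caffeine

// Build the pattern with getKey so that it includes cachePrefix and matches the keys written by put

Set<Object> keys = redisTemplate.keys(getKey("*"));

if (keys != null) {

for (Object key : keys) {

redisTemplate.delete(key);

}

}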

push(new CacheMessage(this.name, null));

caffeineCache.invalidateAll();

}

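/**

* Lookup logic: local cache first, then Redis

* @param key the cache key

* @return the stored value, or null if neither level holds it

*/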

@Override

protected Object lookup(Object key) {

Object cacheKey = getKey(key);

Object value = caffeineCache.getIfPresent(key);

if (value != null) {

logger.debug("get key from local cache, the key is : {}", cacheKey);

return value;

}

value = redisTemplate.opsForValue().get(cacheKey);

if (value != null) {

logger.debug("get value from redis and put it into the local cache, the key is : {}", cacheKey);

caffeineCache.put(key, value);

}

return value;

}

/**

* @description Clear the local cache

*/

public void clearLocal(Object key) {

logger.debug("clear local cache, the key is : {}", key);

if (key == null) {

caffeineCache.invalidateAll();

} else {

caffeineCache.invalidate(key);

}

}

//———————————————————————————— private methods ——————————————————————————

private Object getKey(Object key) {

String keyStr = this.name.concat(":").concat(key.toString());

return StringUtils.isEmpty(this.cachePrefix) ? keyStr : this.cachePrefix.concat(":").concat(keyStr);

}

private long getExpire() {

long expire = defaultExpiration;

Long cacheNameExpire = defaultExpires.get(this.name);

return cacheNameExpire == null ? expire : cacheNameExpire.longValue();

}

/**

* @description Notify the other nodes to clear their local caches when the cache changes

*/

private void push(CacheMessage message) {

redisTemplate.convertAndSend(topic, message);

}

}