This article shows how to install and use the IK analyzer plugin in Elasticsearch 5.x, and how to extend it with a custom dictionary.

The IK analyzer is available at https://github.com/medcl/elasticsearch-analysis-ik/releases. The plugin version must match the Elasticsearch version.

Our cluster runs Elasticsearch 5.6.16, so we download IK 5.6.16:

https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v5.6.16/elasticsearch-analysis-ik-5.6.16.zip
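Because the plugin version must match the ES version, and the asset above follows a visible naming pattern, the URL can be derived from the version string. A minimal Python sketch, assuming every release uses the same `v<version>/elasticsearch-analysis-ik-<version>.zip` layout as 5.6.16 (check the releases page if yours differs); `ik_download_url` is a hypothetical helper, not part of the plugin:

```python
import re

# Base of the release download URLs, taken from the 5.6.16 link above.
RELEASES = "https://github.com/medcl/elasticsearch-analysis-ik/releases/download"


def ik_download_url(es_version: str) -> str:
    """Build the IK plugin zip URL for a given Elasticsearch version.

    Hypothetical helper: assumes the plugin version equals the ES version
    and that the asset name follows the pattern of the 5.6.16 release.
    """
    if not re.fullmatch(r"\d+\.\d+\.\d+", es_version):
        raise ValueError(f"expected a version like 5.6.16, got {es_version!r}")
    return f"{RELEASES}/v{es_version}/elasticsearch-analysis-ik-{es_version}.zip"


if __name__ == "__main__":
    print(ik_download_url("5.6.16"))
```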

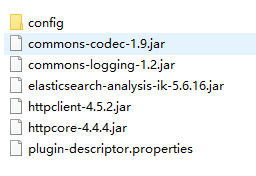

Unzip the archive, put the extracted files in the plugins directory of the ES node, and rename that directory to ik.

Restart ES, then test how IK tokenizes text.

(1) Without specifying an analyzer (the default standard analyzer)

    GET _analyze?pretty
    {
      "text": "安徽省長江流域"
    }

Response:

    {
      "tokens": [
        {
          "token": "安",
          "start_offset": 0,
          "end_offset": 1,
          "type": "<IDEOGRAPHIC>",
          "position": 0
        },
        {
          "token": "徽",
          "start_offset": 1,
          "end_offset": 2,
          "type": "<IDEOGRAPHIC>",
          "position": 1
        },
        {
          "token": "省",
          "start_offset": 2,
          "end_offset": 3,
          "type": "<IDEOGRAPHIC>",
          "position": 2
        },
        {
          "token": "長",
          "start_offset": 3,
          "end_offset": 4,
          "type": "<IDEOGRAPHIC>",
          "position": 3
        },
        {
          "token": "江",
          "start_offset": 4,
          "end_offset": 5,
          "type": "<IDEOGRAPHIC>",
          "position": 4
        },
        {
          "token": "流",
          "start_offset": 5,
          "end_offset": 6,
          "type": "<IDEOGRAPHIC>",
          "position": 5
        },
        {
          "token": "域",
          "start_offset": 6,
          "end_offset": 7,
          "type": "<IDEOGRAPHIC>",
          "position": 6
        }
      ]
    }

As you can see, every character of "安徽省長江流域" becomes a separate token.
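Without a dictionary there is no notion of Chinese words, so each ideograph is emitted on its own. The toy Python function below reproduces the shape of that response for input made only of CJK ideographs, to make the `start_offset`, `end_offset` and `position` fields concrete. It is a simplified model for illustration, not Lucene's StandardTokenizer, and `ideograph_tokens` is a hypothetical name.

```python
import unicodedata
from typing import Dict, List, Union

Token = Dict[str, Union[str, int]]


def ideograph_tokens(text: str) -> List[Token]:
    """Toy model: one token per CJK ideograph, shaped like the response above.

    Only handles text made entirely of CJK unified ideographs.
    """
    tokens: List[Token] = []
    for i, ch in enumerate(text):
        if not unicodedata.name(ch, "").startswith("CJK UNIFIED IDEOGRAPH"):
            raise ValueError(f"not a CJK ideograph: {ch!r}")
        tokens.append({
            "token": ch,
            "start_offset": i,    # offset of the character in the input
            "end_offset": i + 1,  # exclusive end offset
            "type": "<IDEOGRAPHIC>",
            "position": i,        # index of the token in the token stream
        })
    return tokens


if __name__ == "__main__":
    for t in ideograph_tokens("安徽省長江流域"):
        print(t)
```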

(2) With the IK analyzer: ik_smart

    GET _analyze?pretty
    {
      "analyzer": "ik_smart",
      "text": "安徽省長江流域"
    }

Result:

    {
      "tokens": [
        {
          "token": "安徽省",
          "start_offset": 0,
          "end_offset": 3,
          "type": "CN_WORD",
          "position": 0
        },
        {
          "token": "長江流域",
          "start_offset": 3,
          "end_offset": 7,
          "type": "CN_WORD",
          "position": 1
        }
      ]
    }

(3) With the IK analyzer: ik_max_word

    GET _analyze?pretty
    {
      "analyzer": "ik_max_word",
      "text": "安徽省長江流域"
    }

Result:

    {
      "tokens": [
        {
          "token": "安徽省",
          "start_offset": 0,
          "end_offset": 3,
          "type": "CN_WORD",
          "position": 0
        },
        {
          "token": "安徽",
          "start_offset": 0,
          "end_offset": 2,
          "type": "CN_WORD",
          "position": 1
        },
        {
          "token": "省長",
          "start_offset": 2,
          "end_offset": 4,
          "type": "CN_WORD",
          "position": 2
        },
        {
          "token": "長江流域",
          "start_offset": 3,
          "end_offset": 7,
          "type": "CN_WORD",
          "position": 3
        },
        {
          "token": "長江",
          "start_offset": 3,
          "end_offset": 5,
          "type": "CN_WORD",
          "position": 4
        },
        {
          "token": "江流",
          "start_offset": 4,
          "end_offset": 6,
          "type": "CN_WORD",
          "position": 5
        },
        {
          "token": "流域",
          "start_offset": 5,
          "end_offset": 7,
          "type": "CN_WORD",
          "position": 6
        }
      ]
    }

Why can IK recognize Chinese words? Because it ships with built-in dictionaries in its config directory.
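To see how a dictionary can yield the two different outputs above, here is a toy Python model. It is a deliberately simplified sketch, not IK's actual algorithm (IK has its own segmenters and ambiguity handling): `smart_like` uses greedy forward maximum matching, and `max_word_like` emits every dictionary word found at every start position, longest first. `TOY_DICT` is a hypothetical dictionary containing only the words that appear in the responses above.

```python
from typing import List, Set, Tuple

Token = Tuple[str, int, int]  # (token, start_offset, end_offset)


def smart_like(text: str, dictionary: Set[str]) -> List[Token]:
    """Greedy forward maximum matching: at each position take the longest
    dictionary word, falling back to a single character."""
    max_len = max(map(len, dictionary), default=1)
    tokens: List[Token] = []
    i = 0
    while i < len(text):
        for end in range(min(len(text), i + max_len), i, -1):
            word = text[i:end]
            if word in dictionary or end == i + 1:
                tokens.append((word, i, end))
                i = end
                break
    return tokens


def max_word_like(text: str, dictionary: Set[str]) -> List[Token]:
    """Emit every dictionary word at every start position, longest first,
    so tokens may overlap."""
    max_len = max(map(len, dictionary), default=1)
    tokens: List[Token] = []
    for i in range(len(text)):
        for end in range(min(len(text), i + max_len), i, -1):
            if text[i:end] in dictionary:
                tokens.append((text[i:end], i, end))
    return tokens


# Hypothetical toy dictionary built only from the tokens shown above.
TOY_DICT = {"安徽", "安徽省", "省長", "長江", "長江流域", "江流", "流域"}

if __name__ == "__main__":
    print(smart_like("安徽省長江流域", TOY_DICT))
    print(max_word_like("安徽省長江流域", TOY_DICT))
```

With this toy dictionary, `smart_like` returns 安徽省 (offsets 0-3) and 長江流域 (3-7), while `max_word_like` returns the seven overlapping tokens in the same order and with the same offsets as the ik_max_word response. A word missing from the dictionary can only come out as single characters, which is why new terms need a dictionary entry.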

What if we need IK to recognize new words, for example the title of the TV series Game of Thrones, "權利的游戲"?

Custom dictionary

In the IK plugin's config directory, create a tv directory and, inside it, a new file tv.dic (note: the file must be saved as UTF-8 without a BOM).
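The article does not show the contents of tv.dic; IK dictionary files list one word per line, so here it would contain 權利的游戲. A minimal Python sketch that writes such a file as UTF-8 without a BOM and checks for a stray BOM (which some editors add). The helper names are hypothetical, and the `<ES_HOME>/plugins/ik/config/tv/tv.dic` location is an assumed layout.

```python
import codecs
import tempfile
from pathlib import Path
from typing import Iterable


def write_ik_dict(path: Path, words: Iterable[str]) -> None:
    """Write one word per line, encoded as UTF-8 without a BOM."""
    path.parent.mkdir(parents=True, exist_ok=True)
    # The "utf-8" codec never emits a BOM (unlike "utf-8-sig"), and
    # writing bytes avoids platform-specific newline translation.
    data = "".join(word + "\n" for word in words).encode("utf-8")
    path.write_bytes(data)


def has_utf8_bom(path: Path) -> bool:
    """Return True if the file starts with the UTF-8 byte-order mark."""
    return path.read_bytes().startswith(codecs.BOM_UTF8)


if __name__ == "__main__":
    # Demo in a temporary directory; the real file would live at
    # <ES_HOME>/plugins/ik/config/tv/tv.dic (assumed layout).
    with tempfile.TemporaryDirectory() as tmp:
        dic = Path(tmp) / "config" / "tv" / "tv.dic"
        write_ik_dict(dic, ["權利的游戲"])
        print(has_utf8_bom(dic))  # prints False
```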

Then add the new dictionary to the IKAnalyzer.cfg.xml configuration file.
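The exact configuration line is not shown here. IK's config is a Java properties-style XML file in which extension dictionaries are listed under an `<entry key="ext_dict">` element. A Python sketch of that edit, assuming multiple dictionaries in one entry are separated by `;` and paths are relative to the plugin's config directory (so `tv/tv.dic`). `add_ext_dict` is a hypothetical helper. ElementTree drops XML comments, so keep a backup of the original file.

```python
import xml.etree.ElementTree as ET

# ElementTree does not keep the XML declaration or DOCTYPE, so they are
# written back explicitly. XML comments in the file are also dropped.
HEADER = (
    '<?xml version="1.0" encoding="UTF-8"?>\n'
    '<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">\n'
)


def add_ext_dict(cfg_xml: str, dict_path: str) -> str:
    """Return cfg_xml with dict_path added to the ext_dict entry.

    Hypothetical helper. Assumes ';' separates several dictionaries in
    one entry and that paths are relative to the config directory.
    """
    root = ET.fromstring(cfg_xml.encode("utf-8"))
    entry = root.find("entry[@key='ext_dict']")
    if entry is None:
        entry = ET.SubElement(root, "entry", key="ext_dict")
    paths = [p.strip() for p in (entry.text or "").split(";") if p.strip()]
    if dict_path not in paths:  # keep repeated calls idempotent
        paths.append(dict_path)
    entry.text = ";".join(paths)
    return HEADER + ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    sample = HEADER + (
        "<properties>"
        "<comment>IK Analyzer</comment>"
        '<entry key="ext_dict"></entry>'
        "</properties>"
    )
    print(add_ext_dict(sample, "tv/tv.dic"))
```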

Restart ES and Kibana, then test again:

    GET _analyze?pretty
    {
      "analyzer": "ik_smart",
      "text": "權利的游戲"
    }

Tokenization result:

    {
      "tokens": [
        {
          "token": "權利的游戲",
          "start_offset": 0,
          "end_offset": 5,
          "type": "CN_WORD",
          "position": 0
        }
      ]
    }
