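This article walks through a working example of Hadoop's MultipleOutputs, which lets a single MapReduce job write its results to several separately named output files. It also covers two errors encountered while running the job and how they were resolved.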

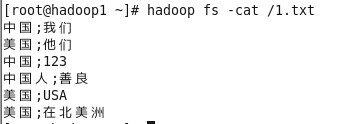

Original data:

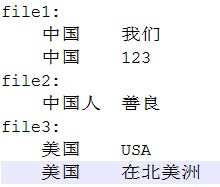
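The mapper later in this article splits every input line on `;` and emits the first field as the key and the second as the value. The plain-Java sketch below mimics that parsing step outside Hadoop. The class name `LineSplitSketch`, the helper `parse`, and the sample lines are hypothetical, since the real input is only visible in the screenshot above.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.Map;

// Hypothetical stand-in for the mapper's parsing step; not part of the original job.
final class LineSplitSketch {

    // Returns (key, value) for a "key;value" line, or null if a field is missing.
    static Map.Entry<String, String> parse(String line) {
        String[] split = line.split(";");
        if (split.length < 2) {
            return null;
        }
        return new SimpleEntry<String, String>(split[0], split[1]);
    }

    public static void main(String[] args) {
        // Sample lines are assumptions made for illustration only.
        String[] lines = {"CN;Beijing", "US;Washington", "no-delimiter"};
        for (String line : lines) {
            Map.Entry<String, String> kv = parse(line);
            System.out.println(kv == null
                    ? "skipped: " + line
                    : kv.getKey() + " -> " + kv.getValue());
        }
    }
}
```

Returning `null` for a line without a second field is one possible design choice; it avoids the `ArrayIndexOutOfBoundsException` that indexing `split[1]` would otherwise raise on malformed input.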

Expected result after processing:

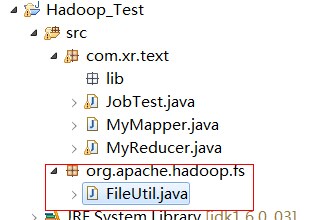
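Producing this result amounts to routing each record to an output chosen by its key. The reducer later in this article does so with an if/else chain over the keys 中國, 美國 and 中國人; the sketch below expresses the same routing as a lookup table in plain Java. The class `OutputRouter` and the method `namedOutputFor` are hypothetical names, and the keys are written as Unicode escapes so the file compiles regardless of source encoding.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper: maps a record key to the named output used in the article.
final class OutputRouter {

    private static final Map<String, String> ROUTES = new HashMap<String, String>();

    static {
        ROUTES.put("\u4e2d\u570b", "china");          // key meaning "China"
        ROUTES.put("\u7f8e\u570b", "usa");            // key meaning "USA"
        ROUTES.put("\u4e2d\u570b\u4eba", "cpeople");  // key meaning "Chinese people"
    }

    // Returns the named output for a key, or null when the key has no route.
    // The article's reducer likewise writes nothing for unknown keys.
    static String namedOutputFor(String key) {
        return ROUTES.get(key);
    }
}
```

Inside a reducer, the returned name could be passed as the first argument of `mos.write(...)` after a null check, which would replace the if/else chain with a single lookup.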

MyMapper.java

    package com.xr.text;

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class MyMapper extends Mapper<LongWritable, Text, Text, Text> {

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Each input line has the form "key;value".
            String[] split = value.toString().split(";");
            // Skip malformed lines instead of failing on split[1].
            if (split.length < 2) {
                return;
            }
            context.write(new Text(split[0]), new Text(split[1]));
        }
    }

MyReducer.java

    package com.xr.text;

    import java.io.IOException;

    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.output.MultipleOutputs;

    public class MyReducer extends Reducer<Text, Text, Text, Text> {

        private MultipleOutputs<Text, Text> mos;

        /**
         * Create the MultipleOutputs instance before the first reduce() call.
         */
        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            mos = new MultipleOutputs<Text, Text>(context);
        }

        @Override
        protected void reduce(Text k1, Iterable<Text> value, Context context)
                throws IOException, InterruptedException {
            String key = k1.toString();
            for (Text t : value) {
                // Route each record to the named output registered for its key.
                if ("中國".equals(key)) {
                    mos.write("china", new Text("中國"), t);
                } else if ("美國".equals(key)) {
                    mos.write("usa", new Text("美國"), t);
                } else if ("中國人".equals(key)) {
                    mos.write("cpeople", new Text("中國人"), t);
                }
            }
        }

        /**
         * Close MultipleOutputs so that all named outputs are flushed.
         */
        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            mos.close();
        }
    }

JobTest.java

    package com.xr.text;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.MultipleOutputs;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    public class JobTest {

        public static void main(String[] args)
                throws IOException, InterruptedException, ClassNotFoundException {
            String inputPath = "hdfs://192.168.75.100:9000/1.txt";
            String outputPath = "hdfs://192.168.75.100:9000/ceshi";

            Job job = new Job();
            job.setJarByClass(JobTest.class);
            job.setMapperClass(MyMapper.class);

            // Register one named output per target file. The names must match
            // the ones passed to mos.write() in MyReducer.
            MultipleOutputs.addNamedOutput(job, "china", TextOutputFormat.class, Text.class, Text.class);
            MultipleOutputs.addNamedOutput(job, "usa", TextOutputFormat.class, Text.class, Text.class);
            MultipleOutputs.addNamedOutput(job, "cpeople", TextOutputFormat.class, Text.class, Text.class);

            job.setReducerClass(MyReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            FileInputFormat.setInputPaths(job, new Path(inputPath));

            // Optional: delete an existing output directory before the run.
            // Configuration conf = new Configuration();
            // FileSystem fs = FileSystem.get(conf);
            //
            // if (fs.exists(new Path(outputPath))) {
            //     fs.delete(new Path(outputPath), true);
            // }

            FileOutputFormat.setOutputPath(job, new Path(outputPath));

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

The following error occurred at run time:

    14/08/12 12:44:02 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    14/08/12 12:44:02 ERROR security.UserGroupInformation: PriviledgedActionException as:Xr cause:java.io.IOException: Failed to set permissions of path: \tmp\hadoop-Xr\mapred\staging\Xr-1514460710\.staging to 0700
    Exception in thread "main" java.io.IOException: Failed to set permissions of path: \tmp\hadoop-Xr\mapred\staging\Xr-1514460710\.staging to 0700
        at org.apache.hadoop.fs.FileUtil.checkReturnValue(FileUtil.java:689)
        at org.apache.hadoop.fs.FileUtil.setPermission(FileUtil.java:662)
        at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:509)
        at org.apache.hadoop.fs.RawLocalFileSystem.mkdirs(RawLocalFileSystem.java:344)
        at org.apache.hadoop.fs.FilterFileSystem.mkdirs(FilterFileSystem.java:189)
        at org.apache.hadoop.mapreduce.JobSubmissionFiles.getStagingDir(JobSubmissionFiles.java:116)
        at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:918)
        at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:912)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:396)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1149)
        at org.apache.hadoop.mapred.JobClient.submitJobInternal(JobClient.java:912)
        at org.apache.hadoop.mapreduce.Job.submit(Job.java:500)
        at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:530)
        at com.xr.text.JobTest.main(JobTest.java:37)

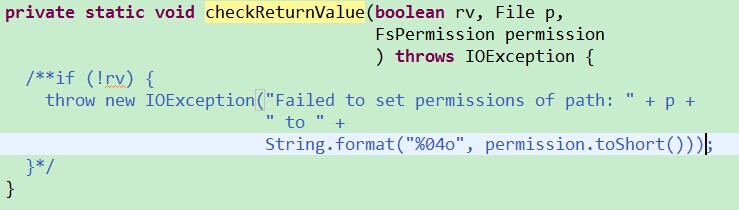

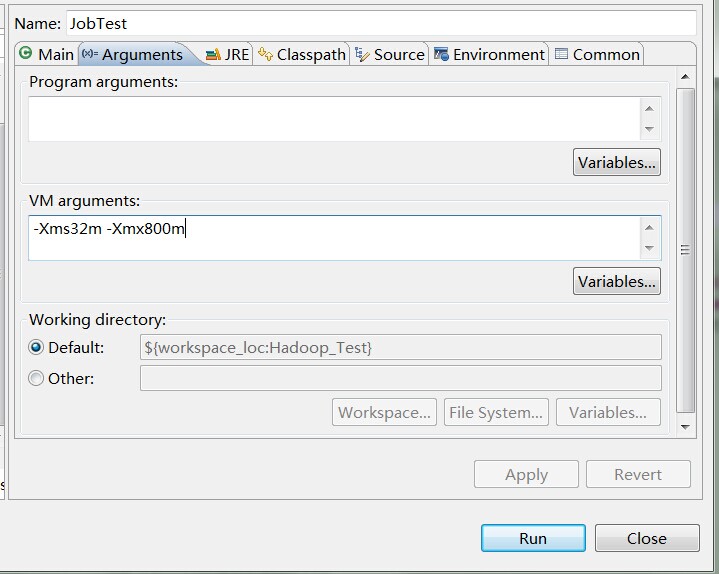

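Solution to the error:

1. Delete FileUtil.class from hadoop-core-1.1.2.jar.

2. Copy /org/apache/hadoop/fs/FileUtil.java out of the Hadoop source code into the project, keeping the same package.

3. Comment out the body of the checkReturnValue() method so that it no longer throws.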
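To make step 3 concrete: the stack trace shows `FileUtil.setPermission` calling `checkReturnValue`, which throws when the local file system refuses a permission change. The sketch below is a simplified, self-contained stand-in written for illustration, not Hadoop's actual source; the class `PermissionCheckSketch` and both method names are hypothetical. It contrasts a strict check that throws with a lenient one that only reports the failure, which is roughly the effect of emptying the method body.

```java
import java.io.File;
import java.io.IOException;

// Hypothetical illustration of the two behaviours; not Hadoop code.
final class PermissionCheckSketch {

    // Strict variant: fails the caller when a permission change was refused.
    static void strictCheck(boolean succeeded, File path) throws IOException {
        if (!succeeded) {
            throw new IOException("Failed to set permissions of path: " + path);
        }
    }

    // Lenient variant: never throws, only reports the refused change.
    // Returns the flag unchanged so callers can still inspect it.
    static boolean lenientCheck(boolean succeeded, File path) {
        if (!succeeded) {
            System.err.println("Ignoring failed permission change on " + path);
        }
        return succeeded;
    }
}
```

Suppressing the check hides genuine permission failures as well, so this workaround is probably best kept to a local development setup.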

Running the job again produced another error:

java.lang.OutOfMemoryError: Java heap space

Solution:

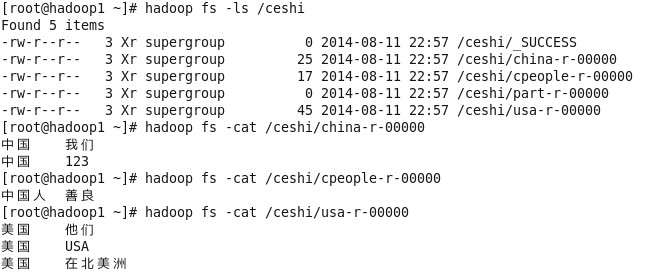
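The fix itself is only visible in the screenshot above. For `Java heap space` errors, a common remedy is to raise the JVM's maximum heap, for example with a VM argument such as `-Xmx512m`; whether that matches the screenshot is an assumption. A small plain-Java check like the following (the class name `HeapCheck` is hypothetical) can confirm which limit the JVM is actually running with.

```java
// Hypothetical helper for inspecting the JVM heap limit; not part of the original job.
final class HeapCheck {

    // Maximum heap the JVM will attempt to use, in megabytes.
    static long maxHeapMb() {
        return Runtime.getRuntime().maxMemory() / (1024L * 1024L);
    }

    public static void main(String[] args) {
        System.out.println("Max heap: " + maxHeapMb() + " MB");
    }
}
```

Running it once with and once without the VM argument shows whether the setting reaches the JVM that launches the job.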

OK, the job now runs successfully.

The following files are generated:
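As a hedged guide to what to expect in the output directory: files written through Hadoop's `FileOutputFormat` family are named from a base name, a task-type letter (`r` for reduce) and a five-digit partition number, so with a single reducer the named outputs registered in `JobTest` would typically appear as `china-r-00000`, `usa-r-00000` and `cpeople-r-00000`. The plain-Java sketch below reproduces that naming pattern; `OutputNameSketch` and `reduceOutputName` are hypothetical names, not Hadoop APIs.

```java
import java.util.Locale;

// Hypothetical illustration of the output file naming pattern; not a Hadoop API.
final class OutputNameSketch {

    // Builds "<namedOutput>-r-<five-digit partition>" for a reduce-side output.
    static String reduceOutputName(String namedOutput, int partition) {
        return String.format(Locale.ROOT, "%s-r-%05d", namedOutput, partition);
    }

    public static void main(String[] args) {
        // The three named outputs registered in the article's JobTest.
        for (String name : new String[] {"china", "usa", "cpeople"}) {
            System.out.println(reduceOutputName(name, 0));
        }
    }
}
```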

