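This chapter collects problems encountered while installing RAC, together with their fixes. If your installation ran into no problems, you can skip it.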

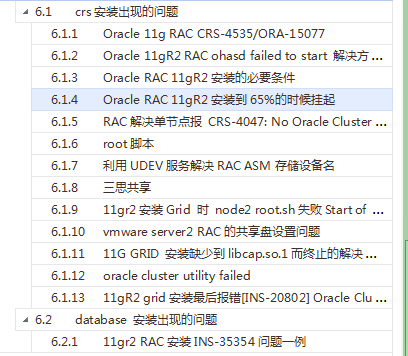

Contents:

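After a fresh Oracle 11g RAC installation, crsd failed to start on the second node for no obvious reason. The error reported was CRS-4535: Cannot communicate with Cluster Ready Services. The detailed cause has to be read from crsd.log.

1. Environment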

[root@linux2 ~]# cat /etc/issue

Enterprise Linux Enterprise Linux Server release 5.5 (Carthage)

Kernel \r on an \m

[root@linux2 bin]# ./crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [11.2.0.1.0]

# Note: the steps below use the grid and root users to operate on different objects.

2. Symptoms

[root@linux2 bin]# ./crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4535: Cannot communicate with Cluster Ready Services #CRS-4535

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

[root@linux2 bin]# ps -ef | grep d.bin # no crsd.bin appears in the output below

root 3886 1 1 09:50 ? 00:00:11 /u01/app/11.2.0/grid/bin/ohasd.bin reboot

grid 3938 1 0 09:51 ? 00:00:04 /u01/app/11.2.0/grid/bin/oraagent.bin

grid 4009 1 0 09:51 ? 00:00:00 /u01/app/11.2.0/grid/bin/gipcd.bin

grid 4014 1 0 09:51 ? 00:00:00 /u01/app/11.2.0/grid/bin/mdnsd.bin

grid 4028 1 0 09:51 ? 00:00:02 /u01/app/11.2.0/grid/bin/gpnpd.bin

root 4040 1 0 09:51 ? 00:00:03 /u01/app/11.2.0/grid/bin/cssdmonitor

root 4058 1 0 09:51 ? 00:00:04 /u01/app/11.2.0/grid/bin/cssdagent

root 4060 1 0 09:51 ? 00:00:00 /u01/app/11.2.0/grid/bin/orarootagent.bin

grid 4090 1 2 09:51 ? 00:00:15 /u01/app/11.2.0/grid/bin/ocssd.bin

grid 4094 1 0 09:51 ? 00:00:02 /u01/app/11.2.0/grid/bin/diskmon.bin -d -f

root 4928 1 0 09:51 ? 00:00:00 /u01/app/11.2.0/grid/bin/octssd.bin reboot

grid 4945 1 0 09:51 ? 00:00:02 /u01/app/11.2.0/grid/bin/evmd.bin

root 6514 5886 0 10:00 pts/1 00:00:00 grep d.bin

[root@linux2 bin]# ./crsctl stat res -t -init

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.asm

1 ONLINE ONLINE linux2 Cluster Reconfigura

tion

ora.crsd

1 ONLINE OFFLINE # crsd is OFFLINE

ora.cssd

1 ONLINE ONLINE linux2

ora.cssdmonitor

1 ONLINE ONLINE linux2

ora.ctssd

1 ONLINE ONLINE linux2 OBSERVER

ora.diskmon

1 ONLINE ONLINE linux2

ora.drivers.acfs

1 ONLINE OFFLINE # acfs is OFFLINE

ora.evmd

1 ONLINE ONLINE linux2

ora.gipcd

1 ONLINE ONLINE linux2

ora.gpnpd

1 ONLINE ONLINE linux2

ora.mdnsd

1 ONLINE ONLINE linux2

# Now look at the log file that belongs to crsd

[grid@linux2 ~]$ view $ORACLE_HOME/log/linux2/crsd/crsd.log

2013-01-05 10:28:27.107: [GIPCXCPT][1768145488] gipcShutdownF: skipping shutdown, count 1, from [ clsgpnp0.c : 1021],

ret gipcretSuccess (0)

2013-01-05 10:28:27.107: [ OCRASM][1768145488]proprasmo: Error in open/create file in dg [OCR_VOTE] # error opening the disk group

[ OCRASM][1768145488]SLOS : SLOS: cat=7, opn=kgfoAl06, dep=15077, loc=kgfokge

ORA-15077: could not locate ASM instance serving a required diskgroup # an ORA error is raised

2013-01-05 10:28:27.107: [ OCRASM][1768145488]proprasmo: kgfoCheckMount returned [7]

2013-01-05 10:28:27.107: [ OCRASM][1768145488]proprasmo: The ASM instance is down # the ASM instance is shut down

2013-01-05 10:28:27.107: [ OCRRAW][1768145488]proprioo: Failed to open [+OCR_VOTE]. Returned proprasmo() with [26].

Marking location as UNAVAILABLE.

2013-01-05 10:28:27.107: [ OCRRAW][1768145488]proprioo: No OCR/OLR devices are usable # OCR/OLR devices are unavailable

2013-01-05 10:28:27.107: [ OCRASM][1768145488]proprasmcl: asmhandle is NULL

2013-01-05 10:28:27.107: [ OCRRAW][1768145488]proprinit: Could not open raw device

2013-01-05 10:28:27.107: [ OCRASM][1768145488]proprasmcl: asmhandle is NULL

2013-01-05 10:28:27.107: [ OCRAPI][1768145488]a_init:16!: Backend init unsuccessful : [26]

2013-01-05 10:28:27.107: [ CRSOCR][1768145488] OCR context init failure. Error: PROC-26: Error while accessing the

physical storage ASM error [SLOS: cat=7, opn=kgfoAl06, dep=15077, loc=kgfokge

ORA-15077: could not locate ASM instance serving a required diskgroup

] [7]

2013-01-05 10:28:27.107: [ CRSD][1768145488][PANIC] CRSD exiting: Could not init OCR, code: 26

2013-01-05 10:28:27.107: [ CRSD][1768145488] Done.

[root@linux2 bin]# ps -ef | grep pmon # no pmon process, which also shows the ASM instance is not running

root 7447 7184 0 10:48 pts/2 00:00:00 grep pmon

# From the analysis above, crsd cannot start because the ASM instance is not running

3. Resolution
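The diagnosis above boils down to finding two error codes in crsd.log. A minimal bash sketch of that check follows; the function name `crsd_asm_down` is hypothetical, and it only looks for the ORA-15077 and PROC-26 strings seen in the excerpt above, so treat it as a quick triage aid rather than a complete diagnosis.

```shell
#!/bin/bash
# Hypothetical helper: report whether a crsd.log shows the
# "ASM instance not reachable" pattern from the excerpt above.
# Usage: crsd_asm_down /path/to/crsd.log
crsd_asm_down() {
    local log="$1"
    # Both codes appear together in the failing log shown in this section.
    if grep -q 'ORA-15077' "$log" && grep -q 'PROC-26' "$log"; then
        echo "ASM_DOWN"
    else
        echo "OTHER"
    fi
}
```

On the node in this example it could be called as `crsd_asm_down $ORACLE_HOME/log/linux2/crsd/crsd.log` by the grid user.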

[grid@linux2 ~]$ asmcmd

Connected to an idle instance.

ASMCMD> startup # start the ASM instance

ASM instance started

Total System Global Area 283930624 bytes

Fixed Size 2212656 bytes

Variable Size 256552144 bytes

ASM Cache 25165824 bytes

ASM diskgroups mounted

ASMCMD> exit

#Author : Robinson

#Blog : http://blog.csdn.net/robinson_0612

# Check the state of the cluster resources again

[root@linux2 bin]# ./crsctl stat res -t -init

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.asm

1 ONLINE ONLINE linux2 Started

ora.crsd

1 ONLINE INTERMEDIATE linux2

ora.cssd

1 ONLINE ONLINE linux2

ora.cssdmonitor

1 ONLINE ONLINE linux2

ora.ctssd

1 ONLINE ONLINE linux2 OBSERVER

ora.diskmon

1 ONLINE ONLINE linux2

ora.drivers.acfs

1 ONLINE OFFLINE

ora.evmd

1 ONLINE ONLINE linux2

ora.gipcd

1 ONLINE ONLINE linux2

ora.gpnpd

1 ONLINE ONLINE linux2

ora.mdnsd

1 ONLINE ONLINE linux2

# Start acfs

[root@linux2 bin]# ./crsctl start res ora.drivers.acfs -init

CRS-2672: Attempting to start 'ora.drivers.acfs' on 'linux2'

CRS-2676: Start of 'ora.drivers.acfs' on 'linux2' succeeded

# After that, every resource is ONLINE

[root@linux2 bin]# ./crsctl stat res -t -init

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.asm

1 ONLINE ONLINE linux2 Started

ora.crsd

1 ONLINE ONLINE linux2

ora.cssd

1 ONLINE ONLINE linux2

ora.cssdmonitor

1 ONLINE ONLINE linux2

ora.ctssd

1 ONLINE ONLINE linux2 OBSERVER

ora.diskmon

1 ONLINE ONLINE linux2

ora.drivers.acfs

1 ONLINE ONLINE linux2

ora.evmd

1 ONLINE ONLINE linux2

ora.gipcd

1 ONLINE ONLINE linux2

ora.gpnpd

1 ONLINE ONLINE linux2

ora.mdnsd

1 ONLINE ONLINE linux2

CRS-4124: Oracle High Availability Services startup failed.

CRS-4000: Command Start failed, or completed with errors.

ohasd failed to start: Inappropriate ioctl for device

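ohasd failed to start at /u01/app/11.2.0/grid/crs/install/rootcrs.pl line 443.

I ran into this classic 11.2.0.1 problem the first time I installed 11gR2 RAC. A quick search showed that it is a known bug, and the workaround is simple:

run the following command while root.sh is executing, since the file it reads is only created partway through root.sh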

/bin/dd if=/var/tmp/.oracle/npohasd of=/dev/null bs=1024 count=1

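If it reports

/bin/dd: opening `/var/tmp/.oracle/npohasd': No such file or directory

the file has not been created yet. Keep rerunning the command until it succeeds. The right moment is usually when root.sh prints Adding daemon to inittab.

Another workaround is to change the ownership of the file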

chown root:oinstall /var/tmp/.oracle/npohasd

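Before rerunning root.sh, remember to remove the existing configuration: /u01/app/11.2.0/grid/crs/install/roothas.pl -deconfig -force -verbose (the later cluster-node cases in this chapter use rootcrs.pl -deconfig -force for the same purpose)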
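The "keep retrying dd until the file exists" step above can be sketched as a small polling loop. This is only an illustration under assumptions: the function name `read_when_present` and its retry parameters are made up here, and the dd arguments are the ones quoted in the text.

```shell
#!/bin/bash
# Sketch: poll until a file appears, then read one block from it with dd.
# read_when_present PATH [MAX_TRIES] [SLEEP_SECONDS]   (hypothetical helper)
read_when_present() {
    local path="$1" max_tries="${2:-600}" pause="${3:-1}" i
    for ((i = 0; i < max_tries; i++)); do
        if [ -e "$path" ]; then
            # Same dd options as in the text: read 1 KB and discard it.
            dd if="$path" of=/dev/null bs=1024 count=1 2>/dev/null
            return $?
        fi
        sleep "$pause"
    done
    echo "gave up waiting for $path" >&2
    return 1
}
```

A possible use is to start `read_when_present /var/tmp/.oracle/npohasd` as root in a second terminal just before launching root.sh, so that the read happens as soon as the file is created.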

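One prerequisite is that the network interfaces are consistent across nodes. Consistency here covers the following points:

1. The interface names must be identical, for example eth0 and eth2 on every node. One node must not use eth0 and eth2 while another uses eth3 and eth4.

I hit exactly this mistake during installation: the first node installed normally, but root.sh on the second node always failed with Failed to Start CSSD.

2. Not only the names but also the public and private roles must match. In other words, you cannot have one node with

eth0: 192.168.1.2

eth2: 10.10.1.2

and another with

eth0: 10.10.1.3

eth2: 192.168.1.3

3. Not only must the addresses correspond, the subnet masks must match as well. Within the same public or private network, one node must not use the mask

255.255.0.0

while another uses 255.255.255.0

When the nodes are created by cloning virtual machines, mismatched interface names are the most common problem.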

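To rename an interface on Red Hat Enterprise Linux 6 (for example, eth6 to eth0):

1. Edit /etc/udev/rules.d/70-persistent-net.rules and change the interface name to the new one

2. Rename the configuration file /etc/sysconfig/network-scripts/ifcfg-eth6 to ifcfg-eth0 as well

3. Restart networking with /etc/rc.d/init.d/network restart

Installing Oracle RAC really is tedious. Once a setting is wrong, all sorts of errors follow.

Addendum: using the same NIC model on every node is recommended. I have not tested whether different models work, but according to the material I found, at least the MTU (maximum transmission unit) of the NICs must match, otherwise errors will also occur.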
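The three consistency rules above (same interface name, same network per interface, same netmask) can be checked mechanically. The bash sketch below assumes you have written one file per node with lines of the form `name ip netmask`; the file format and the function names `net_of`, `nic_signature` and `nics_consistent` are inventions for this illustration, not part of any Oracle tool.

```shell
#!/bin/bash
# net_of IP MASK -> prints the network address (bitwise AND per octet).
net_of() {
    local -a ip mask
    IFS=. read -r -a ip <<< "$1"
    IFS=. read -r -a mask <<< "$2"
    echo "$((ip[0] & mask[0])).$((ip[1] & mask[1])).$((ip[2] & mask[2])).$((ip[3] & mask[3]))"
}

# nic_signature FILE -> one sorted "name network netmask" line per interface.
# FILE holds lines like: eth0 192.168.1.2 255.255.255.0
nic_signature() {
    local name ip mask
    while read -r name ip mask; do
        [ -n "$name" ] || continue
        echo "$name $(net_of "$ip" "$mask") $mask"
    done < "$1" | sort
}

# nics_consistent FILE_A FILE_B -> exit status 0 when both nodes match
# on interface name, network and netmask.
nics_consistent() {
    [ "$(nic_signature "$1")" = "$(nic_signature "$2")" ]
}
```

For example, `nics_consistent node1.txt node2.txt && echo consistent` would stay silent for the swapped eth0/eth2 layout shown in point 2, because the per-interface networks differ.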

Symptom:

While installing Oracle RAC on Red Hat Linux 6, the installer stops responding at 65%.

Cause:

The firewall is enabled.

Fix:

chkconfig iptables off

service iptables stop

[root@his2 bin]# ./crsctl check crs # check the service status

CRS-4047: No Oracle Clusterware components configured.

CRS-4000: Command Check failed, or completed with errors.

[root@his2 bin]# ./crsctl stat res -t

CRS-4047: No Oracle Clusterware components configured.

CRS-4000: Command Status failed, or completed with errors.

[root@his2 bin]# ./crs_stat -t

CRS-0184: Cannot communicate with the CRS daemon.

/app/grid/product/11.2.0/grid/crs/install/rootcrs.pl -deconfig -force # reset the CRS configuration

Using configuration parameter file: /app/grid/product/11.2.0/grid/crs/install/crsconfig_params

Network exists: 1/192.168.20.0/255.255.255.0/eth0, type static

VIP exists: /his1-vip/192.168.20.6/192.168.20.0/255.255.255.0/eth0, hosting node his1

VIP exists: /his2-vip/192.168.20.7/192.168.20.0/255.255.255.0/eth0, hosting node his2

GSD exists

ONS exists: Local port 6100, remote port 6200, EM port 2016

ACFS-9200: Supported

CRS-2673: Attempting to stop 'ora.registry.acfs' on 'his2'

CRS-2677: Stop of 'ora.registry.acfs' on 'his2' succeeded

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'his2'

CRS-2673: Attempting to stop 'ora.crsd' on 'his2'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'his2'

CRS-2673: Attempting to stop 'ora.ORACRS.dg' on 'his2'

CRS-2673: Attempting to stop 'ora.crds3db.db' on 'his2'

CRS-2677: Stop of 'ora.ORACRS.dg' on 'his2' succeeded

CRS-2677: Stop of 'ora.crds3db.db' on 'his2' succeeded

CRS-2673: Attempting to stop 'ora.ORAARCH.dg' on 'his2'

CRS-2673: Attempting to stop 'ora.ORADATA.dg' on 'his2'

CRS-2677: Stop of 'ora.ORAARCH.dg' on 'his2' succeeded

CRS-2677: Stop of 'ora.ORADATA.dg' on 'his2' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'his2'

CRS-2677: Stop of 'ora.asm' on 'his2' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'his2' has completed

CRS-2677: Stop of 'ora.crsd' on 'his2' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'his2'

CRS-2673: Attempting to stop 'ora.evmd' on 'his2'

CRS-2673: Attempting to stop 'ora.asm' on 'his2'

CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'his2'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'his2'

CRS-2677: Stop of 'ora.asm' on 'his2' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'his2'

CRS-2677: Stop of 'ora.evmd' on 'his2' succeeded

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'his2' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'his2' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'his2' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'his2'

CRS-2677: Stop of 'ora.cssd' on 'his2' succeeded

CRS-2673: Attempting to stop 'ora.diskmon' on 'his2'

CRS-2673: Attempting to stop 'ora.crf' on 'his2'

CRS-2677: Stop of 'ora.diskmon' on 'his2' succeeded

CRS-2677: Stop of 'ora.crf' on 'his2' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'his2'

CRS-2677: Stop of 'ora.drivers.acfs' on 'his2' succeeded

CRS-2677: Stop of 'ora.gipcd' on 'his2' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'his2'

CRS-2677: Stop of 'ora.gpnpd' on 'his2' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'his2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

Successfully deconfigured Oracle clusterware stack on this node

[root@his1 ~]# su - grid

[grid@his1 ~]$ crs_stat -t # node 1 shows only its own services, which means node 2 has a problem

Name Type Target State Host

------------------------------------------------------------

ora....ER.lsnr ora....er.type ONLINE ONLINE his1

ora....N1.lsnr ora....er.type ONLINE ONLINE his1

ora.ORAARCH.dg ora....up.type ONLINE ONLINE his1

ora.ORACRS.dg ora....up.type ONLINE ONLINE his1

ora.ORADATA.dg ora....up.type ONLINE ONLINE his1

ora.asm ora.asm.type ONLINE ONLINE his1

ora.crds3db.db ora....se.type ONLINE ONLINE his1

ora.cvu ora.cvu.type ONLINE ONLINE his1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....SM1.asm application ONLINE ONLINE his1

ora....S1.lsnr application ONLINE ONLINE his1

ora.his1.gsd application OFFLINE OFFLINE

ora.his1.ons application ONLINE ONLINE his1

ora.his1.vip ora....t1.type ONLINE ONLINE his1

ora....network ora....rk.type ONLINE ONLINE his1

ora.oc4j ora.oc4j.type ONLINE ONLINE his1

ora.ons ora.ons.type ONLINE ONLINE his1

ora....ry.acfs ora....fs.type ONLINE ONLINE his1

ora.scan1.vip ora....ip.type ONLINE ONLINE his1

[grid@his1 ~]$ crsctl stat res -t # node 1 shows only its own resources, which means node 2 has a problem

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE his1

ora.ORAARCH.dg

ONLINE ONLINE his1

ora.ORACRS.dg

ONLINE ONLINE his1

ora.ORADATA.dg

ONLINE ONLINE his1

ora.asm

ONLINE ONLINE his1 Started

ora.gsd

OFFLINE OFFLINE his1

ora.net1.network

ONLINE ONLINE his1

ora.ons

ONLINE ONLINE his1

ora.registry.acfs

ONLINE ONLINE his1

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE his1

ora.crds3db.db

1 ONLINE ONLINE his1 Open

2 ONLINE OFFLINE Instance Shutdown

ora.cvu

1 ONLINE ONLINE his1

ora.his1.vip

1 ONLINE ONLINE his1

ora.oc4j

1 ONLINE ONLINE his1

ora.scan1.vip

1 ONLINE ONLINE his1

[root@his2 bin]# /app/grid/product/11.2.0/grid/root.sh # run root.sh to reconfigure all cluster services

Running Oracle 11g root script...

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /app/grid/product/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /app/grid/product/11.2.0/grid/crs/install/crsconfig_params

LOCAL ADD MODE

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

OLR initialization - successful

Adding daemon to inittab

ACFS-9200: Supported

ACFS-9300: ADVM/ACFS distribution files found.

ACFS-9307: Installing requested ADVM/ACFS software.

ACFS-9308: Loading installed ADVM/ACFS drivers.

ACFS-9321: Creating udev for ADVM/ACFS.

ACFS-9323: Creating module dependencies - this may take some time.

ACFS-9327: Verifying ADVM/ACFS devices.

ACFS-9309: ADVM/ACFS installation correctness verified.

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node his1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Preparing packages for installation...

cvuqdisk-1.0.9-1

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@his2 bin]# ./crsctl stat res -t # the output shows everything is back to normal

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE his1

ONLINE ONLINE his2

ora.ORAARCH.dg

ONLINE ONLINE his1

ONLINE ONLINE his2

ora.ORACRS.dg

ONLINE ONLINE his1

ONLINE ONLINE his2

ora.ORADATA.dg

ONLINE ONLINE his1

ONLINE ONLINE his2

ora.asm

ONLINE ONLINE his1 Started

ONLINE ONLINE his2

ora.gsd

OFFLINE OFFLINE his1

OFFLINE OFFLINE his2

ora.net1.network

ONLINE ONLINE his1

ONLINE ONLINE his2

ora.ons

ONLINE ONLINE his1

ONLINE ONLINE his2

ora.registry.acfs

ONLINE ONLINE his1

ONLINE ONLINE his2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE his1

ora.crds3db.db

1 ONLINE ONLINE his1 Open

2 ONLINE ONLINE his2 Open

ora.cvu

1 ONLINE ONLINE his1

ora.his1.vip

1 ONLINE ONLINE his1

ora.his2.vip

1 ONLINE ONLINE his2

ora.oc4j

1 ONLINE ONLINE his1

ora.scan1.vip

1 ONLINE ONLINE his1

1. When root.sh is run on the second node, the following error appears:

[root@rac2 ~]# /oracle/app/grid/product/11.2.0/root.sh

Running Oracle 11g root script...

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /oracle/app/grid/product/11.2.0

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /oracle/app/grid/product/11.2.0/crs/install/crsconfig_params

Creating trace directory

LOCAL ADD MODE

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

OLR initialization - successful

Adding daemon to inittab

ACFS-9200: Supported

ACFS-9300: ADVM/ACFS distribution files found.

ACFS-9307: Installing requested ADVM/ACFS software.

ACFS-9308: Loading installed ADVM/ACFS drivers.

ACFS-9321: Creating udev for ADVM/ACFS.

ACFS-9323: Creating module dependencies - this may take some time.

ACFS-9327: Verifying ADVM/ACFS devices.

ACFS-9309: ADVM/ACFS installation correctness verified.

CRS-2672: Attempting to start 'ora.mdnsd' on 'rac2'

CRS-2676: Start of 'ora.mdnsd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'rac2'

CRS-2676: Start of 'ora.gpnpd' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac2'

CRS-2672: Attempting to start 'ora.gipcd' on 'rac2'

CRS-2676: Start of 'ora.gipcd' on 'rac2' succeeded

CRS-2676: Start of 'ora.cssdmonitor' on 'rac2' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'rac2'

CRS-2672: Attempting to start 'ora.diskmon' on 'rac2'

CRS-2676: Start of 'ora.diskmon' on 'rac2' succeeded

CRS-2676: Start of 'ora.cssd' on 'rac2' succeeded

Disk Group CRS creation failed with the following message:

ORA-15018: diskgroup cannot be created

ORA-15031: disk specification '/dev/oracleasm/disks/CRS3' matches no disks

ORA-15025: could not open disk "/dev/oracleasm/disks/CRS3"

ORA-15056: additional error message

Configuration of ASM ... failed

see asmca logs at /oracle/app/oracle/cfgtoollogs/asmca for details

Did not succssfully configure and start ASM at /oracle/app/grid/product/11.2.0/crs/install/crsconfig_lib.pm line 6464.

/oracle/app/grid/product/11.2.0/perl/bin/perl -I/oracle/app/grid/product/11.2.0/perl/lib -I/oracle/app/grid/product/11.2.0/crs/install /oracle/app/grid/product/11.2.0/crs/install/rootcrs.pl execution failed

[grid@rac2 ~]$ vi /oracle/app/oracle/cfgtoollogs/asmca/asmca-110428PM061902.log

……………….

[main] [ 2011-04-28 18:19:38.135 CST ] [UsmcaLogger.logInfo:142] Diskstring in createDG to be updated: '/dev/oracleasm/disks/*'

[main] [ 2011-04-28 18:19:38.136 CST ] [UsmcaLogger.logInfo:142] update param sql ALTER SYSTEM SET asm_diskstring='/dev/oracleasm/disks/*' SID='*'

[main] [ 2011-04-28 18:19:38.262 CST ] [InitParamAttributes.loadDBParams:4450] Checking if SPFILE is used

[main] [ 2011-04-28 18:19:38.276 CST ] [InitParamAttributes.loadDBParams:4461] spParams = [Ljava.lang.String;@1a001ff

[main] [ 2011-04-28 18:19:38.277 CST ] [ASMParameters.loadASMParameters:459] useSPFile=false

[main] [ 2011-04-28 18:19:38.277 CST ] [SQLEngine.doSQLSubstitution:2392] The substituted sql statement:=select count(*) from v$ASM_DISKGROUP where name=upper('CRS')

[main] [ 2011-04-28 18:19:38.423 CST ] [UsmcaLogger.logInfo:142] CREATE DISKGROUP SQL: CREATE DISKGROUP CRS EXTERNAL REDUNDANCY DISK '/dev/oracleasm/disks/CRS1',

'/dev/oracleasm/disks/CRS2',

'/dev/oracleasm/disks/CRS3' ATTRIBUTE 'compatible.asm'='11.2.0.0.0'

[main] [ 2011-04-28 18:19:38.724 CST ] [SQLEngine.done:2167] Done called

[main] [ 2011-04-28 18:19:38.731 CST ] [UsmcaLogger.logException:172] SEVERE:method oracle.sysman.assistants.usmca.backend.USMDiskGroupManager:createDiskGroups

[main] [ 2011-04-28 18:19:38.731 CST ] [UsmcaLogger.logException:173] ORA-15018: diskgroup cannot be created

ORA-15031: disk specification '/dev/oracleasm/disks/CRS3' matches no disks

ORA-15025: could not open disk "/dev/oracleasm/disks/CRS3"

ORA-15056: additional error message

[main] [ 2011-04-28 18:19:38.731 CST ] [UsmcaLogger.logException:174] oracle.sysman.assistants.util.sqlEngine.SQLFatalErrorException: ORA-15018: diskgroup cannot be created

ORA-15031: disk specification '/dev/oracleasm/disks/CRS3' matches no disks

ORA-15025: could not open disk "/dev/oracleasm/disks/CRS3"

ORA-15056: additional error message

oracle.sysman.assistants.util.sqlEngine.SQLEngine.executeImpl(SQLEngine.java:1655)

oracle.sysman.assistants.util.sqlEngine.SQLEngine.executeSql(SQLEngine.java:1903)

oracle.sysman.assistants.usmca.backend.USMDiskGroupManager.createDiskGroups(USMDiskGroupManager.java:236)

oracle.sysman.assistants.usmca.backend.USMDiskGroupManager.createDiskGroups(USMDiskGroupManager.java:121)

oracle.sysman.assistants.usmca.backend.USMDiskGroupManager.createDiskGroupsLocal(USMDiskGroupManager.java:2209)

oracle.sysman.assistants.usmca.backend.USMInstance.configureLocalASM(USMInstance.java:3093)

oracle.sysman.assistants.usmca.service.UsmcaService.configureLocalASM(UsmcaService.java:1047)

oracle.sysman.assistants.usmca.model.UsmcaModel.performConfigureLocalASM(UsmcaModel.java:903)

oracle.sysman.assistants.usmca.model.UsmcaModel.performOperation(UsmcaModel.java:779)

oracle.sysman.assistants.usmca.Usmca.execute(Usmca.java:171)

oracle.sysman.assistants.usmca.Usmca.main(Usmca.java:366)

[main] [ 2011-04-28 18:19:38.732 CST ] [UsmcaLogger.logExit:123] Exiting oracle.sysman.assistants.usmca.backend.USMDiskGroupManager Method : createDiskGroups

[main] [ 2011-04-28 18:19:38.732 CST ] [UsmcaLogger.logInfo:142] Diskgroups created

[main] [ 2011-04-28 18:19:38.733 CST ] [UsmcaLogger.logInfo:142] Diskgroup creation is not successful.

[main] [ 2011-04-28 18:19:38.733 CST ] [UsmcaLogger.logExit:123] Exiting oracle.sysman.assistants.usmca.model.UsmcaModel Method : performConfigureLocalASM

[main] [ 2011-04-28 18:19:38.733 CST ] [UsmcaLogger.logExit:123] Exiting oracle.sysman.assistants.usmca.model.UsmcaModel Method : performOperation

Solution:

Give the grid user access to /dev/oracleasm:

[root@rac2 ~]# chown -R grid.oinstall /dev/oracleasm

[root@rac2 ~]# chmod -R 775 /dev/oracleasm

2. ora.asm fails to run on rac2

[grid@rac2 ~]$ crsctl status resource -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.CRS.dg

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.asm

ONLINE ONLINE rac1 Started

ONLINE ONLINE rac2

ora.gsd

OFFLINE OFFLINE rac1

OFFLINE OFFLINE rac2

ora.net1.network

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.ons

ONLINE ONLINE rac1

ONLINE ONLINE rac2

ora.registry.acfs

ONLINE ONLINE rac1

ONLINE ONLINE rac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1

ora.cvu

1 ONLINE ONLINE rac1

ora.oc4j

1 ONLINE ONLINE rac1

ora.rac1.vip

1 ONLINE ONLINE rac1

ora.rac2.vip

1 ONLINE ONLINE rac2

ora.scan1.vip

1 ONLINE ONLINE rac1

[root@rac2 ~]# /oracle/app/grid/product/11.2.0/crs/install/rootcrs.pl -verbose -deconfig -force -lastnode

Using configuration parameter file: /oracle/app/grid/product/11.2.0/crs/install/crsconfig_params

Network exists: 1/10.157.45.0/255.255.255.0/eth0, type static

VIP exists: /rac1vip/10.157.45.174/10.157.45.0/255.255.255.0/eth0, hosting node rac1

VIP exists: /rac2vip/10.157.45.157/10.157.45.0/255.255.255.0/eth0, hosting node rac2

GSD exists

ONS exists: Local port 6100, remote port 6200, EM port 2016

ACFS-9200: Supported

CRS-2673: Attempting to stop 'ora.registry.acfs' on 'rac2'

CRS-2677: Stop of 'ora.registry.acfs' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.crsd' on 'rac2'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'rac2'

CRS-2673: Attempting to stop 'ora.CRS.dg' on 'rac2'

CRS-2677: Stop of 'ora.CRS.dg' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.asm' on 'rac2'

CRS-2677: Stop of 'ora.asm' on 'rac2' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'rac2' has completed

CRS-2677: Stop of 'ora.crsd' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.ctssd' on 'rac2'

CRS-2673: Attempting to stop 'ora.evmd' on 'rac2'

CRS-2673: Attempting to stop 'ora.asm' on 'rac2'

CRS-2677: Stop of 'ora.asm' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rac2'

CRS-2677: Stop of 'ora.evmd' on 'rac2' succeeded

CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rac2' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'rac2'

CRS-2677: Stop of 'ora.cssd' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.diskmon' on 'rac2'

CRS-2677: Stop of 'ora.diskmon' on 'rac2' succeeded

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node rac1, number 1, and is terminating

Unable to communicate with the Cluster Synchronization Services daemon.

CRS-4000: Command Delete failed, or completed with errors.

crsctl delete for vds in CRS ... failed

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac2'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'rac2'

CRS-2673: Attempting to stop 'ora.crf' on 'rac2'

CRS-2677: Stop of 'ora.mdnsd' on 'rac2' succeeded

CRS-2677: Stop of 'ora.crf' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.gipcd' on 'rac2'

CRS-2677: Stop of 'ora.gipcd' on 'rac2' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'rac2'

CRS-2677: Stop of 'ora.gpnpd' on 'rac2' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac2' has completed

CRS-4133: Oracle High Availability Services has been stopped.

Successfully deconfigured Oracle clusterware stack on this node

[root@rac2 ~]# /oracle/app/grid/product/11.2.0/root.sh

Running Oracle 11g root script...

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /oracle/app/grid/product/11.2.0

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /oracle/app/grid/product/11.2.0/crs/install/crsconfig_params

LOCAL ADD MODE

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

OLR initialization - successful

Adding daemon to inittab

ACFS-9200: Supported

ACFS-9300: ADVM/ACFS distribution files found.

ACFS-9307: Installing requested ADVM/ACFS software.

ACFS-9308: Loading installed ADVM/ACFS drivers.

ACFS-9321: Creating udev for ADVM/ACFS.

ACFS-9323: Creating module dependencies - this may take some time.

ACFS-9327: Verifying ADVM/ACFS devices.

ACFS-9309: ADVM/ACFS installation correctness verified.

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node rac1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Preparing packages for installation...

cvuqdisk-1.0.9-1

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

3. The resource ora.rac1.LISTENER_RAC1.lsnr cannot be brought online

NAME=ora.rac1.LISTENER_RAC1.lsnr

TYPE=application

TARGET=ONLINE

STATE=OFFLINE

[grid@rac1 ~]$ crs_start ora.rac1.LISTENER_RAC1.lsnr

CRS-2805: Unable to start 'ora.LISTENER.lsnr' because it has a 'hard' dependency on resource type 'ora.cluster_vip_net1.type' and no resource of that type can satisfy the dependency

CRS-2525: All instances of the resource 'ora.rac2.vip' are already running; relocate is not allowed because the force option was not specified

CRS-0222: Resource 'ora.rac1.LISTENER_RAC1.lsnr' has dependency error.

4. [INS-20802] Oracle Cluster Verification Utility failed.

Cause - The plug-in failed in its perform method. Action - Refer to the logs or contact Oracle Support Services. Log File Location

/oracle/app/oraInventory/logs/installActions2011-04-28_05-32-48PM.log

tail -n 6000 /oracle/app/oraInventory/logs/installActions2011-04-28_05-32-48PM.log

INFO: Checking VIP reachability

INFO: Check for VIP reachability passed.

INFO: Post-check for cluster services setup was unsuccessful.

INFO: Checks did not pass for the following node(s):

INFO: rac-cluster,rac1

INFO:

WARNING:

INFO: Completed Plugin named: Oracle Cluster Verification Utility

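In <Why ASMLIB and why not?> we covered the pros and cons of ASMLIB, a kernel support library designed specifically for Oracle Automatic Storage Management, and recommended replacing it with the mature UDEV approach.

Here are the concrete steps for configuring UDEV. They are fairly simple:

1. Confirm that the required UDEV package is installed on all RAC nodes

[root@rh3 ~]# rpm -qa|grep udev

udev-095-14.21.el5

2. Use scsi_id to obtain the unique identifier of each block device, assuming the system already has LUNs sdc through sdp

for i in c d e f g h i j k l m n o p ;

do

echo "sd$i" "`scsi_id -g -u -s /block/sd$i`";

done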

sdc 1IET_00010001

sdd 1IET_00010002

sde 1IET_00010003

sdf 1IET_00010004

sdg 1IET_00010005

sdh 1IET_00010006

sdi 1IET_00010007

sdj 1IET_00010008

sdk 1IET_00010009

sdl 1IET_0001000a

sdm 1IET_0001000b

sdn 1IET_0001000c

sdo 1IET_0001000d

sdp 1IET_0001000e

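The output above lists each block device name with its unique identifier

3. Create the required UDEV rules file

First change to the rules directory

[root@rh3 ~]# cd /etc/udev/rules.d

Then define the rules file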

[root@rh3 rules.d]# touch 99-oracle-asmdevices.rules

[root@rh3 rules.d]# cat 99-oracle-asmdevices.rules

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_00010001", NAME="ocr1", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_00010002", NAME="ocr2", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_00010003", NAME="asm-disk1", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_00010004", NAME="asm-disk2", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_00010005", NAME="asm-disk3", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_00010006", NAME="asm-disk4", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_00010007", NAME="asm-disk5", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_00010008", NAME="asm-disk6", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_00010009", NAME="asm-disk7", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_0001000a", NAME="asm-disk8", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_0001000b", NAME="asm-disk9", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_0001000c", NAME="asm-disk10", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_0001000d", NAME="asm-disk11", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="1IET_0001000e", NAME="asm-disk12", OWNER="grid", GROUP="asmadmin", MODE="0660"

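RESULT is the output of /sbin/scsi_id -g -u -s %p. The udev documentation describes it as: Match the returned string of the last PROGRAM call. This key may be used in any following rule after a PROGRAM call.

Fill in the unique identifiers obtained earlier, in order.

OWNER is the user that installs Grid Infrastructure, normally grid in 11gR2; GROUP is asmadmin; a MODE of 0660 is sufficient.

NAME is the device name that UDEV creates after mapping.

It is advisable to create a dedicated DISKGROUP for the OCR and VOTE DISK. To make its devices easy to tell apart, name them ocr1..ocrn.

The remaining disks can be named after their actual purpose or their disk group.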
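Typing fourteen near-identical rules by hand invites typos in the key names. As a minimal sketch, assuming the "device identifier" pairs are piped in exactly as printed in step 2 and that the first N disks are reserved for OCR and voting, the rules could be generated instead. The function name gen_asm_rules and its argument are made up for this illustration; the owner, group, and mode are the ones used above.

```shell
#!/bin/sh
# gen_asm_rules N: read "device scsi_id" pairs on stdin and print one udev
# rule per disk. The first N disks are named ocr1..ocrN, the remaining ones
# asm-disk1, asm-disk2, ... (hypothetical helper, not part of udev).
gen_asm_rules() {
    awk -v nocr="${1:-2}" '
        NF >= 2 {                       # skip blank or incomplete lines
            n++
            name = (n <= nocr) ? "ocr" n : "asm-disk" (n - nocr)
            printf "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id -g -u -s %%p\", RESULT==\"%s\", NAME=\"%s\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\"\n", $2, name
        }'
}
```

With the identifier list saved in a file (say ids.txt, an assumed name), `gen_asm_rules 2 < ids.txt > 99-oracle-asmdevices.rules` would produce the same rules as the listing above.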

4. Copy the rules file to the other nodes

[root@rh3 rules.d]# scp 99-oracle-asmdevices.rules Other_node:/etc/udev/rules.d

5. Start the udev service on all nodes, or simply reboot the servers

[root@rh3 rules.d]# /sbin/udevcontrol reload_rules

[root@rh3 rules.d]# /sbin/start_udev

Starting udev: [ OK ]

6. Check that the devices are in place

[root@rh3 rules.d]# cd /dev

[root@rh3 dev]# ls -l ocr*

brw-rw---- 1 grid asmadmin 8, 32 Jul 10 17:31 ocr1

brw-rw---- 1 grid asmadmin 8, 48 Jul 10 17:31 ocr2

[root@rh3 dev]# ls -l asm-disk*

brw-rw---- 1 grid asmadmin 8, 64 Jul 10 17:31 asm-disk1

brw-rw---- 1 grid asmadmin 8, 208 Jul 10 17:31 asm-disk10

brw-rw---- 1 grid asmadmin 8, 224 Jul 10 17:31 asm-disk11

brw-rw---- 1 grid asmadmin 8, 240 Jul 10 17:31 asm-disk12

brw-rw---- 1 grid asmadmin 8, 80 Jul 10 17:31 asm-disk2

brw-rw---- 1 grid asmadmin 8, 96 Jul 10 17:31 asm-disk3

brw-rw---- 1 grid asmadmin 8, 112 Jul 10 17:31 asm-disk4

brw-rw---- 1 grid asmadmin 8, 128 Jul 10 17:31 asm-disk5

brw-rw---- 1 grid asmadmin 8, 144 Jul 10 17:31 asm-disk6

brw-rw---- 1 grid asmadmin 8, 160 Jul 10 17:31 asm-disk7

brw-rw---- 1 grid asmadmin 8, 176 Jul 10 17:31 asm-disk8

brw-rw---- 1 grid asmadmin 8, 192 Jul 10 17:31 asm-disk9
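Reading fourteen ls lines by eye on every node gets tedious. The following is a small sketch of the same check, assuming GNU stat (as shipped on Linux); check_dev is a made-up helper name, and the mode is passed the way stat prints it (660 rather than 0660).

```shell
#!/bin/sh
# check_dev PATH OWNER GROUP MODE: succeed only if PATH exists and has the
# expected owner, group and octal permission bits (hypothetical helper).
check_dev() {
    actual=$(stat -c '%U %G %a' "$1" 2>/dev/null) || {
        echo "MISSING $1"
        return 1
    }
    if [ "$actual" = "$2 $3 $4" ]; then
        echo "OK $1"
    else
        echo "BAD $1: found '$actual', expected '$2 $3 $4'"
        return 1
    fi
}
```

On each node it could be called as `for d in /dev/ocr* /dev/asm-disk*; do check_dev "$d" grid asmadmin 660; done`.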

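Ever since I published "A hands-on guide to installing Oracle 10g RAC on Linux with VMware", my vanity has once again been thoroughly satisfied, because friends keep thanking me (though hardly any of them offer to buy me dinner, sob, all talk and no action), saying the document is well written, with clear steps and screenshots for reference.

However, quite a few of them have also contacted me about problems during installation, and most of those problems came down to node 2 not detecting the shared disks when running oracleasm listdisks.

When configuring a RAC database under VMware, this problem usually has one of two causes, described below.

1. Add the disk-sharing parameters in VMware

To configure a RAC database with VMware, be sure to use the server edition (VMware comes in server and workstation editions). 三思 stressed this in the document, but some readers may still have missed it. In addition, the disk-sharing parameters must be added to the *.vmx configuration file of every VMware node; otherwise the shared disks may not be recognized correctly.

Below is an example of the disk-sharing parameters from the vmx file in 三思's environment: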

disk.locking = "false"

diskLib.dataCacheMaxSize = "0"

diskLib.dataCacheMaxReadAheadSize = "0"

diskLib.DataCacheMinReadAheadSize = "0"

diskLib.dataCachePageSize = "4096"

diskLib.maxUnsyncedWrites = "0"

scsi1.sharedBus = "virtual"
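Since a single missing line is enough to break sharing, it can help to verify each node's file mechanically. This is only a sketch: check_vmx_sharing is a made-up name, the expected settings are simply the seven lines listed above, and keys and values are compared case-insensitively on the assumption that "false" and "FALSE" are equivalent.

```shell
#!/bin/sh
# check_vmx_sharing FILE: print every disk-sharing setting from the list above
# that is absent from the given .vmx file; return 1 if any is missing.
check_vmx_sharing() {
    vmx=$1
    missing=0
    while IFS='=' read -r key value; do
        [ -n "$key" ] || continue
        # The "." in a key matches any character here, which is harmless.
        if ! grep -iq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*\"${value}\"" "$vmx"; then
            echo "missing: $key = \"$value\""
            missing=1
        fi
    done <<'EOF'
disk.locking=false
diskLib.dataCacheMaxSize=0
diskLib.dataCacheMaxReadAheadSize=0
diskLib.DataCacheMinReadAheadSize=0
diskLib.dataCachePageSize=4096
diskLib.maxUnsyncedWrites=0
scsi1.sharedBus=virtual
EOF
    return "$missing"
}
```

Running it against the .vmx file of every node should print nothing when all seven settings are present.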

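2. The shared disk is not actually shared

The second cause is even more absurd, yet cases where it prevents disk sharing are not rare. It mainly comes from a shallow understanding of the Oracle RAC architecture.

Before getting to the point, let me first clarify a related concept: what exactly is shared storage? As the name implies, shared storage is disk space that the relevant nodes access jointly. Put more bluntly, the nodes access the same disk (or disks); in a virtual machine environment, that means accessing the same disk files.

An ORACLE database consists of an instance plus a database. The instance is a set of operating system processes plus a region of operating system memory; the database is a collection of files of various types (data files, temp files, redo log files, control files, and so on). An ORACLE database in a RAC environment is really multiple instances (each running on a different node, although running them on the same node should generally also be possible) reading and writing a single database. Where does the database live? On shared storage. In other words, the files accessed by the RAC instances must be on the same disks.

OK, back to the topic. When creating the second node and adding the disks for the voting disk, OCR, and ASM, some readers do not select the files created on the first node (Use an existing virtual disk) but instead create new disk files (Create a new virtual disk). That setup shares nothing at all, so shared disk storage naturally cannot work.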
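One way to catch this mistake is to compare which disk files the two nodes' .vmx files point at. The sketch below assumes the shared disks sit on the scsi1 controller and that both .vmx files refer to them by the same path string (relative paths that resolve to the same file would be reported as different). Both function names are invented for this example.

```shell
#!/bin/sh
# shared_disk_files FILE: print the fileName of every disk attached to the
# scsi1 controller in a .vmx file, one per line, sorted. Carriage returns are
# stripped in case the file was written on Windows.
shared_disk_files() {
    tr -d '\r' < "$1" |
        sed -n 's/^[[:space:]]*scsi1:[0-9][0-9]*\.fileName[[:space:]]*=[[:space:]]*"\(.*\)"[[:space:]]*$/\1/p' |
        sort
}

# same_shared_disks A B: succeed only if both files list at least one scsi1
# disk and the two lists are identical.
same_shared_disks() {
    a=$(shared_disk_files "$1")
    b=$(shared_disk_files "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

If `same_shared_disks node1.vmx node2.vmx` fails, printing `shared_disk_files` for each file shows which node references a different disk file.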

When installing GI, root.sh on the first node rarely fails, but on the second node I hit the following error:

Start of resource "ora.asm -init" failed

Failed to start ASM

Failed to start Oracle Clusterware stack

Check the error log:

[grid@znode2 crsconfig]$ pwd

/u01/app/11.2.0/grid/cfgtoollogs/crsconfig

[grid@znode2 crsconfig]$ vi + rootcrs_znode2.log

…

2012-06-28 17:53:50: Starting CSS in clustered mode

2012-06-28 17:54:42: CRS-2672: Attempting to start 'ora.cssdmonitor' on 'znode2'

2012-06-28 17:54:42: CRS-2676: Start of 'ora.cssdmonitor' on 'znode2' succeeded

2012-06-28 17:54:42: CRS-2672: Attempting to start 'ora.cssd' on 'znode2'

2012-06-28 17:54:42: CRS-2672: Attempting to start 'ora.diskmon' on 'znode2'

2012-06-28 17:54:42: CRS-2676: Start of 'ora.diskmon' on 'znode2' succeeded

2012-06-28 17:54:42: CRS-2676: Start of 'ora.cssd' on 'znode2' succeeded

(note: there is a 10-minute wait here)

2012-06-28 18:04:47: Start of resource "ora.ctssd -init -env USR_ORA_ENV=CTSS_REBOOT=TRUE" Succeeded

2012-06-28 18:04:47: Command return code of 1 (256) from command: /u01/app/11.2.0/grid/bin/crsctl start resource ora.asm -init

2012-06-28 18:04:47: Start of resource "ora.asm -init" failed

2012-06-28 18:04:47: Failed to start ASM

2012-06-28 18:04:47: Failed to start Oracle Clusterware stack

…
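A stall like this ten-minute wait is easy to miss in a long log. Here is a rough sketch for flagging such gaps, assuming every line of interest starts with a YYYY-MM-DD HH:MM:SS timestamp as in the excerpt above and that GNU date (with its -d option) is available. The name find_log_gaps is invented for this example.

```shell
#!/bin/sh
# find_log_gaps SECONDS: read log lines on stdin and print each timestamped
# line that follows the previous timestamped line by at least SECONDS.
# Lines without a leading timestamp are ignored.
find_log_gaps() {
    threshold=$1
    prev=""
    while IFS= read -r line; do
        ts=$(printf '%s\n' "$line" |
            sed -n 's/^\([0-9]\{4\}-[0-9]\{2\}-[0-9]\{2\} [0-9]\{2\}:[0-9]\{2\}:[0-9]\{2\}\).*/\1/p')
        [ -n "$ts" ] || continue
        # Interpret all timestamps in UTC so DST changes cannot skew the gap.
        epoch=$(TZ=UTC date -d "$ts" +%s) || continue
        if [ -n "$prev" ] && [ $((epoch - prev)) -ge "$threshold" ]; then
            printf '%s seconds before: %s\n' "$((epoch - prev))" "$line"
        fi
        prev=$epoch
    done
}
```

For instance, `find_log_gaps 300 < rootcrs_znode2.log` would list every line preceded by five minutes or more of silence. It starts one date process per line, so it suits a single log file rather than bulk processing.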

/log/znode2/alert/znodename.log

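CRS-5818:Aborted command 'start for resource: ora.ctssd 1 1' for resource 'ora.ctssd'. Details at..

There are several possible causes for this problem:

1. The public or private network interfaces are configured incorrectly

2. The firewall has not been turned off

3. The hostname appears on the 127.0.0.1 line of /etc/hosts

I checked /etc/hosts and found:

127.0.0.1 znode1 localhost.localdomain localhost

I then changed the 127.0.0.1 line on all nodes to:

127.0.0.1 localhost.localdomain localhost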
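The third cause can be checked on every node before running root.sh. This is a minimal sketch, where loopback_names is a hypothetical helper and any name beginning with "localhost" is treated as a legitimate loopback alias.

```shell
#!/bin/sh
# loopback_names HOSTSFILE: print every name on a 127.0.0.1 line that is not
# a localhost alias. Any output means a real hostname is mapped to loopback.
loopback_names() {
    awk '
        { sub(/#.*/, "") }              # drop comments before inspecting fields
        $1 == "127.0.0.1" {
            for (i = 2; i <= NF; i++)
                if ($i !~ /^localhost/) print $i
        }' "$1"
}
```

Against the original file above, `loopback_names /etc/hosts` would print znode1; after the fix it prints nothing.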

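After closing OUI, running deinstall, and reinstalling, the installation completed without problems.

Alternatively, it should also work to leave OUI open, run the following on the failed node only, and then re-run root.sh:

/app/product/grid/11.2.0/crs/install/roothas.pl -delete -force -verbose

/app/product/grid/11.2.0/root.sh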

1. Attach the second disk to the scsi1 controller and set the disk to independent-persistent. Add it in the same way on both nodes.

2. Add or modify the following parameters:

scsi1:0.mode = "independent-persistent"

scsi1.present = "TRUE"

#scsi1.sharedBus = "none"

scsi1.virtualDev = "lsilogic"

scsi1:0.present = "TRUE"

scsi1:0.fileName = "D:\VM RAC\vm_rac1\vm_shared_disk.vmdk"

scsi1:0.writeThrough = "TRUE"

disk.locking = "FALSE"

diskLib.dataCacheMaxSize = "0"

diskLib.dataCacheMaxReadAheadSize = "0"

diskLib.DataCacheMinReadAheadSize = "0"

diskLib.dataCachePageSize = "4096"

diskLib.maxUnsyncedWrites = "0"

scsi1.sharedBus = "virtual"

scsi1.shared = "TRUE"

3. Then start both VMs. On the first node, partition the shared disk with fdisk and run partprobe on the device. Check the result on node 1, then on node 2, to see whether the two are in sync:

[root@rac1 ~]# fdisk -l

Disk /dev/sda: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1177 9349830 83 Linux

/dev/sda3 1178 1305 1028160 82 Linux swap / Solaris

Disk /dev/sdb: 5368 MB, 5368709120 bytes

255 heads, 63 sectors/track, 652 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/sdb doesn't contain a valid partition table

[root@rac1 ~]# fdisk /dev/sdb

。。。。。

。。。。。

Command (m for help): p

Disk /dev/sdb: 5368 MB, 5368709120 bytes

255 heads, 63 sectors/track, 652 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 13 104391 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table.

The new table will be used at the next reboot.

Syncing disks.

[root@rac1 ~]# partprobe /dev/sdb

[root@rac1 ~]# fdisk -l

Disk /dev/sda: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1177 9349830 83 Linux

/dev/sda3 1178 1305 1028160 82 Linux swap / Solaris

Disk /dev/sdb: 5368 MB, 5368709120 bytes

255 heads, 63 sectors/track, 652 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 13 104391 83 Linux

Node 2:

[root@rac2 ~]# fdisk -l

Disk /dev/sda: 10.7 GB, 10737418240 bytes

255 heads, 63 sectors/track, 1305 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 1177 9349830 83 Linux

/dev/sda3 1178 1305 1028160 82 Linux swap / Solaris

Disk /dev/sdb: 5368 MB, 5368709120 bytes

255 heads, 63 sectors/track, 652 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 13 104391 83 Linux

While building an 11g RAC test environment on OEL 6.3, the final step of running root.sh failed with the error libcap.so.1: cannot open shared object file: No such file or directory, as shown below:

[root@rac1 11.2.0]# /g01/oraInventory/orainstRoot.sh

Changing permissions of /g01/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /g01/oraInventory to oinstall.

The execution of the script is complete.

[root@rac1 11.2.0]# /g01/app/11.2.0/grid/root.sh

Running Oracle 11g root.sh script...

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /g01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

2013-10-10 03:41:35: Parsing the host name

2013-10-10 03:41:35: Checking for super user privileges

2013-10-10 03:41:35: User has super user privileges

Using configuration parameter file: /g01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

/g01/app/11.2.0/grid/bin/clscfg.bin: error while loading shared libraries: libcap.so.1: cannot open shared object file: No such file or directory

Failed to create keys in the OLR, rc = 127, 32512

OLR configuration failed

A quick search showed that a missing package was the cause. Install the package on both nodes:

[root@rac1 Packages]# rpm -ivh compat-libcap1-1.10-1.x86_64.rpm

warning: compat-libcap1-1.10-1.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:compat-libcap1 ########################################### [100%]

Remove the previous CRS configuration:

[root@rac1 ~]# perl $GRID_HOME/crs/install/rootcrs.pl -verbose -deconfig -force

2013-10-10 04:01:41: Parsing the host name

2013-10-10 04:01:41: Checking for super user privileges

2013-10-10 04:01:41: User has super user privileges

Using configuration parameter file: /g01/app/11.2.0/grid/crs/install/crsconfig_params

PRCR-1035 : Failed to look up CRS resource ora.cluster_vip.type for 1

PRCR-1068 : Failed to query resources

Cannot communicate with crsd

PRCR-1070 : Failed to check if resource ora.gsd is registered

Cannot communicate with crsd

PRCR-1070 : Failed to check if resource ora.ons is registered

Cannot communicate with crsd

PRCR-1070 : Failed to check if resource ora.eons is registered

Cannot communicate with crsd

ADVM/ACFS is not supported on oraclelinux-release-6Server-3.0.2.x86_64

ACFS-9201: Not Supported

Failure at scls_scr_setval with code 8

Internal Error Information:

Category: -2

Operation: failed

Location: scrsearch4

Other: id doesnt exist scls_scr_setval

System Dependent Information: 2

CRS-4544: Unable to connect to OHAS

CRS-4000: Command Stop failed, or completed with errors.

error: package cvuqdisk is not installed

Successfully deconfigured Oracle clusterware stack on this node

Running root.sh again then failed with a different error: ohasd failed to start at /g01/app/11.2.0/grid/crs/install/rootcrs.pl line 443.

[root@rac1 ~]# /g01/app/11.2.0/grid/root.sh

Running Oracle 11g root.sh script...

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /g01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root.sh script.

Now product-specific root actions will be performed.

2013-10-10 04:02:57: Parsing the host name

2013-10-10 04:02:57: Checking for super user privileges

2013-10-10 04:02:57: User has super user privileges

Using configuration parameter file: /g01/app/11.2.0/grid/crs/install/crsconfig_params

LOCAL ADD MODE

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding daemon to inittab

CRS-4124: Oracle High Availability Services startup failed.

CRS-4000: Command Start failed, or completed with errors.

ohasd failed to start: Inappropriate ioctl for device

ohasd failed to start at /g01/app/11.2.0/grid/crs/install/rootcrs.pl line 443.

A web search showed that this is, of all things, an ORACLE bug. The workaround is to keep running the following command in another window once "pa user cert" appears, until the command succeeds. Truly perverse.

/bin/dd if=/var/tmp/.oracle/npohasd of=/dev/null bs=1024 count=1

For details, see: https://forums.oracle.com/thread/2352285
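Both problems in this section lend themselves to small helpers. The sketch below is illustrative only, and missing_libs and retry_until_ok are made-up names. The first filters ldd output for libraries the loader cannot resolve, which would have exposed the missing libcap.so.1 before root.sh ran. The second replaces pressing up-arrow and Enter in the second window with a bounded retry loop.

```shell
#!/bin/sh
# missing_libs: read `ldd` output on stdin and print the libraries that the
# dynamic loader could not resolve (lines of the form "libX => not found").
missing_libs() {
    awk '/=> not found/ { print $1 }'
}

# retry_until_ok MAX DELAY CMD...: run CMD until it succeeds, at most MAX
# times, sleeping DELAY seconds between attempts. Returns 1 if it never
# succeeds.
retry_until_ok() {
    max=$1
    delay=$2
    shift 2
    attempt=1
    while ! "$@"; do
        [ "$attempt" -lt "$max" ] || return 1
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}
```

For example, `ldd /g01/app/11.2.0/grid/bin/clscfg.bin | missing_libs` lists unresolved libraries, and `retry_until_ok 600 1 /bin/dd if=/var/tmp/.oracle/npohasd of=/dev/null bs=1024 count=1`, started in a second terminal, keeps retrying the dd command from the workaround for up to roughly ten minutes. The limit of 600 attempts is an arbitrary choice.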

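When installing Oracle 11gR2 grid infrastructure on Linux, after root.sh finished, the final verification step reported the following error: "oracle cluster utility failed".

Everything before that had gone fine.

Checking crs_stat -t showed that both the target and the state of the gsd resource were offline.

According to Oracle's official documentation, GSD is used by 9.2 databases; if there is no 9.2 database, the service can stay OFFLINE.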

5.3.4 Enabling The Global Services Daemon (GSD) for Oracle Database Release 9.2

By default, the Global Services daemon (GSD) is disabled. If you install Oracle Database 9i release 2 (9.2) on Oracle Grid Infrastructure for a Cluster 11g release 2 (11.2), then you must enable the GSD. Use the following commands to enable the GSD before you install Oracle Database release 9.2:

srvctl enable nodeapps -g

srvctl start nodeapps

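So this issue can be ignored.

The installation log contained NTPD error messages, and I remembered that I had not started the NTPD daemon earlier and had skipped past the resulting error.

So I started the NTPD service manually:

[bash]# chkconfig --level 2345 ntpd on

[bash]# /etc/init.d/ntpd restart

I then reinstalled, and this time everything went fine.

With an 11g installation, it is best if every verification check passes.

Postscript:

Actually, this error can be ignored. NTPD is not strictly required; it only provides time synchronization, and when NTP is not configured, 11gR2's own Cluster Time Synchronization Service (ctssd) runs in active mode instead.

The gsd resource being offline does not matter either: its initial target is offline, and it is no longer used in 11g.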

The cause is said to be that the SCAN IP was specified in the hosts file.

The log also reports errors:

INFO: Checking Single Client Access Name (SCAN)...

INFO: Checking name resolution setup for "rac-scan"...

INFO: ERROR:

INFO: PRVF-4664 : Found inconsistent name resolution entries for SCAN name "rac-scan"

INFO: ERROR:

INFO: PRVF-4657 : Name resolution setup check for "rac-scan" (IP address: 192.168.0.20) failed

INFO: ERROR:

INFO: PRVF-4664 : Found inconsistent name resolution entries for SCAN name "rac-scan"

INFO: Verification of SCAN VIP and Listener setup failed

A search turned up an article by Lao Yang (yangtingkun) that mentions the same error:

F:RHEL5.532oracle_patchyangtingkun 安裝Oracle11_2 RAC for Solaris10 sparc64(二).mht

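At the end of the article, Lao Yang notes:

This error is caused by configuring the SCAN address in /etc/hosts. Try pinging the address; if the ping succeeds, the error can be ignored.

I was able to ping the SCAN IP, so I ignored the error for now.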
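To follow that advice on every node, it helps to see exactly which addresses /etc/hosts assigns to the SCAN name. This is a minimal sketch, where hosts_ips_for is a hypothetical helper name and rac-scan is the SCAN name from the log above.

```shell
#!/bin/sh
# hosts_ips_for HOSTSFILE NAME: print each distinct IP address that the hosts
# file maps to NAME, ignoring comments.
hosts_ips_for() {
    awk -v name="$2" '
        { sub(/#.*/, "") }
        { for (i = 2; i <= NF; i++) if ($i == name) print $1 }
    ' "$1" | sort -u
}
```

Each address printed by `hosts_ips_for /etc/hosts rac-scan` can then be passed to `ping -c 1`. If the nodes print different addresses, their hosts files disagree about the SCAN name.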

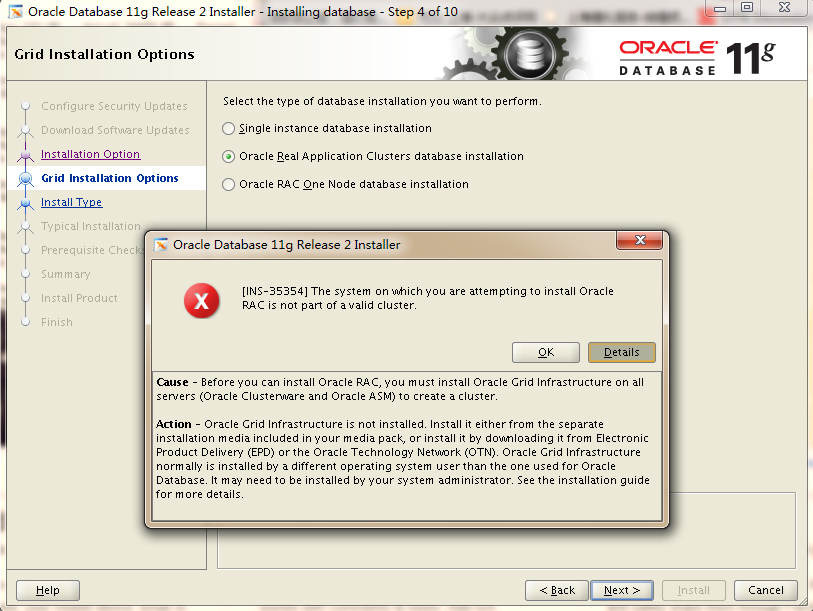

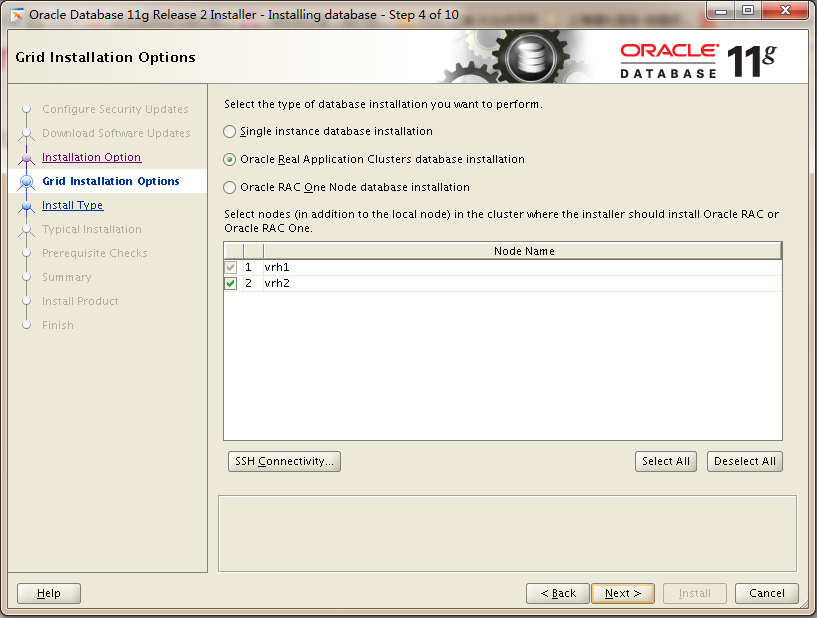

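Today, while installing an 11.2.0.2 RAC database, I ran into the INS-35354 problem:

Since 11.2.0.2 GI had already been installed successfully and the cluster state was entirely normal, the error came as something of a surprise: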

[grid@vrh2 ~]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

A search on MOS suggested that the cause might be an incorrectly updated inventory.xml in oraInventory:

Applies to:

Oracle Server - Enterprise Edition - Version: 11.2.0.1 to 11.2.0.2 - Release: 11.2 to 11.2

Information in this document applies to any platform.

Symptoms

Installing 11gR2 database software in a Grid Infrastructure environment fails with the error INS-35354:

The system on which you are attempting to install Oracle RAC is not part of a valid cluster.

Grid Infrastructure (Oracle Clusterware) is running on all nodes in the cluster which can be verified with:

crsctl check crs

Changes

This is a new install.

Cause

As per 11gR2 documentation the error description is:

INS-35354: The system on which you are attempting to install Oracle RAC is not part of a valid cluster.

Cause: Prior to installing Oracle RAC, you must create a valid cluster.

This is done by deploying Grid Infrastructure software,

which will allow configuration of Oracle Clusterware and Automatic Storage Management.

However, the problem at hand may be that the central inventory is missing the "CRS=true" flag

(for the Grid Infrastructure Home).

<inventory.xml>

-------------

<HOME_LIST>

<HOME NAME="Ora11g_gridinfrahome1" LOC="/u01/grid" TYPE="O" IDX="1">

<NODE_LIST>

<NODE NAME="node1"/>

<NODE NAME="node2"/>

</NODE_LIST>

-------------

From the inventory.xml, we see that the HOME NAME line is missing the CRS="true" flag.

The error INS-35354 will occur when the central inventory entry for the Grid Infrastructure

home is missing the flag that identifies it as CRS-type home.

Solution

Use the -updateNodeList option for the installer command to fix the inventory.

The full syntax is:

./runInstaller -updateNodeList "CLUSTER_NODES={node1,node2}"

ORACLE_HOME="" ORACLE_HOME_NAME="" LOCAL_NODE="Node_Name" CRS=[true|false]

Execute the command on any node in the cluster.

Examples:

For a two-node RAC cluster on UNIX:

Node1:

cd /u01/grid/oui/bin

./runInstaller -updateNodeList "CLUSTER_NODES={node1,node2}" ORACLE_HOME="/u01/crs"

ORACLE_HOME_NAME="GI_11201" LOCAL_NODE="node1" CRS=true

For a 2-node RAC cluster on Windows:

Node 1:

cd e:\app\11.2.0\grid\oui\bin

e:\app\11.2.0\grid\oui\bin\setup -updateNodeList "CLUSTER_NODES={RACNODE1,RACNODE2}"

ORACLE_HOME="e:\app\11.2.0\grid" ORACLE_HOME_NAME="OraCrs11g_home1" LOCAL_NODE="RACNODE1" CRS=true

The inventory.xml in my environment looked like this:

[grid@vrh2 ContentsXML]$ cat inventory.xml

<?xml version="1.0" standalone="yes" ?>

<!-- Copyright (c) 1999, 2010, Oracle. All rights reserved. -->

<!-- Do not modify the contents of this file by hand. -->

<INVENTORY>

<VERSION_INFO>

<SAVED_WITH>11.2.0.2.0</SAVED_WITH>

<MINIMUM_VER>2.1.0.6.0</MINIMUM_VER>

</VERSION_INFO>

<HOME_LIST>

<HOME NAME="Ora11g_gridinfrahome1" LOC="/g01/11.2.0/grid" TYPE="O" IDX="1" >

<NODE_LIST>

<NODE NAME="vrh2"/>

<NODE NAME="vrh3"/>

</NODE_LIST>

</HOME>

</HOME_LIST>

</INVENTORY>

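Clearly the CRS="true" flag is missing from the <HOME NAME entry, which makes the OUI installer conclude during its check that GI is not installed on this node.

The fix is actually simple: add CRS="true" and restart runInstaller. There is no need for the complicated runInstaller -updateNodeList command described in the note.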

[grid@vrh2 ContentsXML]$ cat /g01/oraInventory/ContentsXML/inventory.xml

<?xml version="1.0" standalone="yes" ?>

<!-- Copyright (c) 1999, 2010, Oracle. All rights reserved. -->

<!-- Do not modify the contents of this file by hand. -->

<INVENTORY>

<VERSION_INFO>

<SAVED_WITH>11.2.0.2.0</SAVED_WITH>

<MINIMUM_VER>2.1.0.6.0</MINIMUM_VER>

</VERSION_INFO>

<HOME_LIST>

<HOME NAME="Ora11g_gridinfrahome1" LOC="/g01/11.2.0/grid" TYPE="O" IDX="1" CRS="true">

<NODE_LIST>

<NODE NAME="vrh2"/>

<NODE NAME="vrh3"/>

</NODE_LIST>

</HOME>

</HOME_LIST>

</INVENTORY>

After the change above, the problem was resolved and the installer screen displayed normally.
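Before launching the database installer, the flag can be checked from the shell. This is a sketch only: home_has_crs_flag is an invented name, and it assumes each HOME element sits on a single line, as in the listings above.

```shell
#!/bin/sh
# home_has_crs_flag INVENTORY_XML HOME_PATH: succeed only if the <HOME ...>
# entry whose LOC attribute equals HOME_PATH carries CRS="true".
home_has_crs_flag() {
    grep -F "LOC=\"$2\"" "$1" | grep '<HOME ' | grep -q 'CRS="true"'
}
```

In the environment above this would be called as `home_has_crs_flag /g01/oraInventory/ContentsXML/inventory.xml /g01/11.2.0/grid`. A non-zero exit status means the GI home is not marked as a CRS home and INS-35354 is likely.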
