
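This article explains in detail how to get variables and print weight values in TensorFlow. I find it quite practical, so I'm sharing it for reference. I hope you get something useful out of it.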

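When working with TensorFlow, we often need the value of a particular variable, for example to print the weights of some layer. Usually we can fetch it directly through the variable's `name` attribute. When we build the network's layers with a third-party library, however, there is a catch: we never define the layer's variables ourselves, because the library creates them automatically.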
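To make the problem concrete, here is a minimal sketch. It assumes TensorFlow 1.x-style graph code, run through `tensorflow.compat.v1` so it also works on TF 2.x. The function `hypothetical_conv_layer` is made up for illustration. Like a library layer, it creates its variables internally and returns only the output tensor, so the caller has no Python handle on the weights.

```python
# Sketch in TF 1.x graph style; on TF 2.x the same calls live under tf.compat.v1.
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()


def hypothetical_conv_layer(inputs, filters):
    """Hypothetical stand-in for a library layer: it creates its own
    variables internally and returns only the output tensor."""
    # With name_or_scope=None, the scope gets a unique default name ("Conv").
    with tf.variable_scope(None, default_name="Conv"):
        in_channels = inputs.shape.as_list()[-1]
        weights = tf.get_variable("weights", shape=[3, 3, in_channels, filters])
        biases = tf.get_variable(
            "biases", shape=[filters], initializer=tf.zeros_initializer())
        conv = tf.nn.conv2d(inputs, weights, strides=[1, 1, 1, 1], padding="SAME")
        return tf.nn.relu(conv + biases)


images = tf.placeholder(tf.float32, [None, 32, 32, 3])
with tf.variable_scope("generator"):
    net = hypothetical_conv_layer(images, 16)

# The caller never received `weights`, but the variable is still registered
# in the graph under a name built from the enclosing scopes.
names = [v.name for v in tf.trainable_variables()]
print(names)  # ['generator/Conv/weights:0', 'generator/Conv/biases:0']
```

The weights still exist in the graph under a predictable scoped name. That is what a name-based lookup relies on.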

For example, when using TensorFlow's slim library:

```python
import tensorflow as tf                 # TensorFlow 1.x
import tensorflow.contrib.slim as slim  # slim ships in tf.contrib (TF 1.x only)


def resnet_stack(images, output_shape, hparams, scope=None):
    """Create a resnet style transfer block.

    Args:
      images: [batch-size, height, width, channels] image tensor to feed as input
      output_shape: output image shape in form [height, width, channels]
      hparams: hparams objects
      scope: Variable scope

    Returns:
      Images after processing with resnet blocks.
    """
    end_points = {}
    if hparams.noise_channel:
        # separate the noise for visualization
        end_points['noise'] = images[:, :, :, -1]
    assert images.shape.as_list()[1:3] == output_shape[0:2]

    with tf.variable_scope(scope, 'resnet_style_transfer', [images]):
        with slim.arg_scope(
                [slim.conv2d],
                normalizer_fn=slim.batch_norm,
                kernel_size=[hparams.generator_kernel_size] * 2,
                stride=1):
            # First conv layer: slim creates its weights internally.
            net = slim.conv2d(
                images,
                hparams.resnet_filters,
                normalizer_fn=None,
                activation_fn=tf.nn.relu)
            for block in range(hparams.resnet_blocks):
                # resnet_block() is defined elsewhere in the same module (not shown here).
                net = resnet_block(net, hparams)
                end_points['resnet_block_{}'.format(block)] = net

            net = slim.conv2d(
                net,
                output_shape[-1],
                kernel_size=[1, 1],
                normalizer_fn=None,
                activation_fn=tf.nn.tanh,
                scope='conv_out')
            end_points['transferred_images'] = net
    return net, end_points
```

We want the weights of the first convolutional layer. How do we get them?

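Every trainable variable, including the ones a library creates for you, is registered by TensorFlow in the collection returned by `tf.trainable_variables()`. So we can print `tf.trainable_variables()` to find the name of the convolutional layer's variable, or work the name out ourselves from the scopes. We then fetch the variable with `tf.get_default_graph().get_tensor_by_name`.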
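For the slim case, the steps above can be wrapped in a small helper. This is a sketch under assumptions. `find_tensor` is a hypothetical helper. The variables are created by hand with names that mimic what `slim.conv2d` would produce for the first layer of `resnet_stack`: slim's default layer scope is `Conv`, and it names its variables `weights` and `biases`. The sketch also assumes `resnet_stack` is called at the top level with `scope=None`. Print `tf.trainable_variables()` in your own graph to confirm the real names.

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # TF 1.x graph semantics (ref variables, sessions)

# Hand-made variables whose names mimic slim's naming (an assumption).
with tf.variable_scope("resnet_style_transfer"):
    with tf.variable_scope("Conv"):
        tf.get_variable("weights", shape=[3, 3, 3, 8])
        tf.get_variable("biases", shape=[8], initializer=tf.zeros_initializer())
    with tf.variable_scope("conv_out"):
        tf.get_variable("weights", shape=[1, 1, 8, 3])


def find_tensor(scope, suffix):
    """Hypothetical helper: return the tensor of the first trainable variable
    whose name starts with `scope` and ends with `suffix`."""
    for v in tf.trainable_variables():
        if v.name.startswith(scope) and v.name.endswith(suffix):
            return tf.get_default_graph().get_tensor_by_name(v.name)
    raise KeyError("no trainable variable matching {}*{}".format(scope, suffix))


first_weights = find_tensor("resnet_style_transfer/Conv/", "weights:0")

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    value = sess.run(first_weights)
    print(first_weights.name, value.shape)
```

Matching on a scope prefix plus a suffix keeps the lookup working when you only know roughly where the layer sits.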

Here is a simple example:

<span >import tensorflow as tf</span>

<span >with tf.variable_scope("generate"):</span>

<span > with tf.variable_scope("resnet_stack"):</span>

<span > #簡單起見,這里沒有用第三方庫來說明,</span>

<span > bias = tf.Variable(0.0,name="bias")</span>

<span > weight = tf.Variable(0.0,name="weight")</span>

<span ></span>

<span >for tv in tf.trainable_variables():</span>

<span > print (tv.name)</span>

<span ></span>

<span >b = tf.get_default_graph().get_tensor_by_name("generate/resnet_stack/bias:0")</span>

<span >w = tf.get_default_graph().get_tensor_by_name("generate/resnet_stack/weight:0")</span>

<span ></span>

<span >with tf.Session() as sess:</span>

<span > tf.global_variables_initializer().run()</span>

<span > print(sess.run(b))</span>

<span > print(sess.run(w))

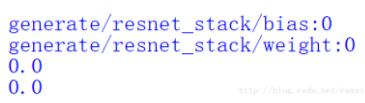

</span>結果如下:
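As a variation on the example above, you can skip the string lookup entirely. `tf.trainable_variables()` accepts a `scope` argument that filters variables by name (using `re.match`), and the returned `Variable` objects can be passed straight to `sess.run`. The following is a minimal sketch, again using `tensorflow.compat.v1`. The values and the extra `unrelated` variable are made up for illustration.

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

with tf.variable_scope("generate"):
    with tf.variable_scope("resnet_stack"):
        bias = tf.Variable(0.0, name="bias")
        weight = tf.Variable([[1.0, 2.0], [3.0, 4.0]], name="weight")
    unrelated = tf.Variable(5.0, name="unrelated")  # outside resnet_stack

# Keep only variables whose names match the scope prefix.
stack_vars = tf.trainable_variables(scope="generate/resnet_stack")

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    values = sess.run(stack_vars)  # fetch the Variable objects directly
    for var, value in zip(stack_vars, values):
        print(var.name)
        print(value)
```

Fetching the `Variable` objects avoids hard-coding the `":0"` tensor names.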

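That's all for this article on how to get variables and print weights in TensorFlow. I hope it helps you learn something new. If you found it useful, please share it so more people can see it.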
