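This article shows how to install a highly available Kubernetes 1.16.0 cluster from binaries on CentOS 7.3, covering system preparation, etcd, the master and node components, networking, and the apiserver load balancer.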

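Server plan:

At least three servers are needed to build the highly available cluster, each with 2 CPU cores and 4 GB of RAM or more.

Docker (version 18 or later) is already installed on all master and node machines.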

VIP 172.30.2.60

172.30.0.109 k8s-master1 nginx keepalived

172.30.0.81 k8s-master2 nginx keepalived

172.30.0.89 k8s-node1

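Kubernetes binary install path: /opt/kubernetes/{ssl,cfg,bin,logs}, holding certificates and keys, configuration files, executables, and logs respectively.

etcd binary install path: /opt/etcd/{ssl,cfg,bin}, holding certificates and keys, configuration files, and executables respectively.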
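
The directory layout above can be created in one pass. The following is a minimal sketch, not part of the original procedure; the function name make_layout and its optional root argument are assumptions added so the layout can be tried in a scratch directory first.

```sh
#!/bin/sh
# Create the /opt/kubernetes and /opt/etcd layout under a given root.
# $1 = root directory (defaults to "/"); pass a scratch path to try it safely.
make_layout() {
    root="${1:-/}"
    # Kubernetes: certificates/keys, config, executables, logs
    for d in ssl cfg bin logs; do
        mkdir -p "${root%/}/opt/kubernetes/$d" || return 1
    done
    # etcd: certificates/keys, config, executables
    for d in ssl cfg bin; do
        mkdir -p "${root%/}/opt/etcd/$d" || return 1
    done
}
```

Calling make_layout with no argument creates the directories under /, which requires root privileges.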

1. System Initialization

1) Disable the firewall:

# systemctl stop firewalld

# systemctl disable firewalld

2) Disable SELinux:

# setenforce 0 # temporary, until the next reboot

# sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

3) Disable swap:

# swapoff -a # temporary, until the next reboot

# vim /etc/fstab # permanent: comment out the swap entry
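
Editing /etc/fstab by hand works, but the same change can be scripted. The sketch below assumes GNU sed (as shipped with CentOS 7); the function name disable_swap_in_fstab is hypothetical, and it writes a .bak copy before modifying the file.

```sh
#!/bin/sh
# Comment out every active swap entry in an fstab-style file.
# $1 = file to edit (defaults to /etc/fstab); a backup goes to "$1.bak".
disable_swap_in_fstab() {
    file="${1:-/etc/fstab}"
    cp "$file" "$file.bak" || return 1
    # Skip lines that are already comments; prefix lines that contain
    # a whitespace-delimited "swap" field with "#".
    sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}
```

After running it, `swapoff -a` still has to be executed once for the current boot.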

4) Synchronize the system time:

# ntpdate time.windows.com

5) Add the cluster hosts to /etc/hosts:

# vim /etc/hosts

172.30.0.109 k8s-master1

172.30.0.81 k8s-master2

172.30.0.89 k8s-node1

6) Set the hostname (use each machine's own name):

# hostnamectl set-hostname k8s-master1

2. etcd Cluster

1) Install the cfssl tools

# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

# chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

# mv cfssl_linux-amd64 /usr/local/bin/cfssl

# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

# mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

2) Generate the etcd certificates

① Create server-csr.json. The hosts field of the request file must list the IPs of all etcd nodes:

# vi server-csr.json

{

"CN": "etcd",

"hosts": [

"<etcd01 node IP>",

"<etcd02 node IP>",

"<etcd03 node IP>"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}
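
Because the hosts list is the only part of this request that changes between environments, it can be generated from the node IPs. This is a sketch under assumptions: render_etcd_csr is a hypothetical helper, and the other fields simply repeat the values shown above.

```sh
#!/bin/sh
# Print an etcd server CSR for cfssl with one "hosts" entry per argument.
render_etcd_csr() {
    hosts=""
    for ip in "$@"; do
        # Build a comma-separated list of quoted JSON strings.
        hosts="${hosts:+$hosts, }\"$ip\""
    done
    cat <<EOF
{
  "CN": "etcd",
  "hosts": [$hosts],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "BeiJing", "ST": "BeiJing" } ]
}
EOF
}

# Example with the etcd addresses used later in this article:
#   render_etcd_csr 172.30.2.10 172.30.0.81 172.30.0.89 > server-csr.json
```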

② Create a custom CA, starting with its configuration files

# vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

# vim ca-csr.json

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

Once the etcd request files are ready, generate the CA and the server certificate and key:

# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

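Place the generated certificates and keys in /opt/etcd/ssl.

Place the etcd executables, etcd and etcdctl, in /opt/etcd/bin.

Place the etcd configuration file in /opt/etcd/cfg. Its content is as follows: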

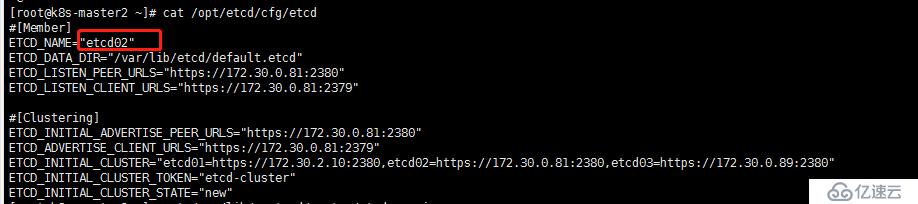

# cat /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.30.0.81:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.30.0.81:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.30.0.81:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.30.0.81:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://172.30.2.10:2380,etcd02=https://172.30.0.81:2380,etcd03=https://172.30.0.89:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
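
Only the member name and the node's own IP differ between the three copies of this file, so it can be rendered per node. The helper below is a hypothetical sketch (render_etcd_conf is not part of etcd); the ports, data directory, and token are the values from the listing above.

```sh
#!/bin/sh
# Print the content of /opt/etcd/cfg/etcd for one cluster member.
# $1 = member name, $2 = this node's IP, $3 = full initial-cluster string.
render_etcd_conf() {
    name="$1"; ip="$2"; cluster="$3"
    cat <<EOF
#[Member]
ETCD_NAME="$name"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="https://$ip:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$ip:2379"
ETCD_INITIAL_CLUSTER="$cluster"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example for the third member:
#   CLUSTER="etcd01=https://172.30.2.10:2380,etcd02=https://172.30.0.81:2380,etcd03=https://172.30.0.89:2380"
#   render_etcd_conf etcd03 172.30.0.89 "$CLUSTER" > /opt/etcd/cfg/etcd
```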

Place etcd.service under /usr/lib/systemd/system/:

# cat /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/opt/etcd/cfg/etcd

ExecStart=/opt/etcd/bin/etcd \

--name=${ETCD_NAME} \

--data-dir=${ETCD_DATA_DIR} \

--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=/opt/etcd/ssl/server.pem \

--key-file=/opt/etcd/ssl/server-key.pem \

--peer-cert-file=/opt/etcd/ssl/server.pem \

--peer-key-file=/opt/etcd/ssl/server-key.pem \

--trusted-ca-file=/opt/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Copy both to each of the three etcd nodes:

# scp -r /opt/etcd root@<etcd node IP>:/opt

# scp /usr/lib/systemd/system/etcd.service root@<etcd node IP>:/usr/lib/systemd/system

On each etcd node, change the member name and IP addresses in the configuration file so that they match that host.

Start etcd on every node; this completes the etcd installation:

# systemctl start etcd && systemctl enable etcd

Check the health of the etcd cluster:

# /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://172.30.2.10:2379,https://172.30.0.81:2379,https://172.30.0.89:2379" cluster-health

member 37f20611ff3d9209 is healthy: got healthy result from https://172.30.2.10:2379

member b10f0bac3883a232 is healthy: got healthy result from https://172.30.0.81:2379

member b46624837acedac9 is healthy: got healthy result from https://172.30.0.89:2379

cluster is healthy
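
When this check is run from a script, the output can be reduced to an exit status. The sketch below assumes the etcdctl v2 cluster-health output format shown above, and assumes that failing members are reported with the words "unhealthy" or "unreachable"; etcd_cluster_healthy is a hypothetical helper name.

```sh
#!/bin/sh
# Read cluster-health output on stdin. Succeed only if the summary line is
# "cluster is healthy" and no member is reported unhealthy or unreachable.
etcd_cluster_healthy() {
    awk '
        /is unhealthy|is unreachable/ { bad = 1 }
        /^cluster is healthy$/        { ok = 1 }
        END { exit ((ok && !bad) ? 0 : 1) }
    '
}

# Example (requires the running cluster):
#   /opt/etcd/bin/etcdctl ... cluster-health | etcd_cluster_healthy && echo "etcd OK"
```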

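3. Deploy the Kubernetes Cluster: Master Nodes

① Create a new custom CA, kept separate from the etcd CA, and use it to generate the apiserver, kube-proxy, and kubectl admin certificates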

# vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

# vim ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

② Create the kube-proxy certificate request file

# cat kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

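③ Create the apiserver certificate request file. The hosts list must contain the IPs of all master nodes plus every address used to reach the apiserver, including the nginx hosts and the nginx VIP; if one is missing, the certificate has to be regenerated.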

# vim server-csr.json

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"172.30.2.60",

"172.30.0.109",

"172.30.0.81",

"172.30.2.10",

"172.30.0.89"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

④ Create the admin certificate request, used by kubectl as a remote client

# vim admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

⑤ Generate the certificates and keys

# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

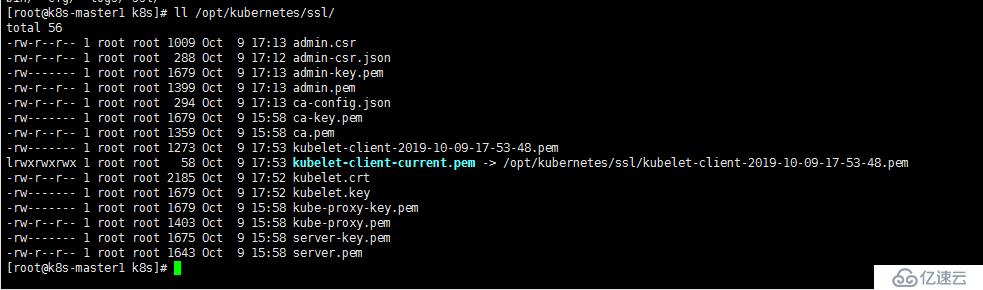

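Copy the *.pem files to /opt/kubernetes/ssl on every node (or copy only the files each node needs). The kubelet certificate is generated automatically later, when the kubelet is deployed.

Generate the admin kubeconfig file, which clients use to access the cluster: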

# kubectl config set-cluster kubernetes --certificate-authority=/opt/kubernetes/ssl/ca.pem --embed-certs=true --server=https://172.30.0.109:6443 --kubeconfig=/root/.kube/kubectl.kubeconfig

# kubectl config set-credentials kube-admin --client-certificate=/opt/kubernetes/ssl/admin.pem --client-key=/opt/kubernetes/ssl/admin-key.pem --embed-certs=true --kubeconfig=/root/.kube/kubectl.kubeconfig

# kubectl config set-context kube-admin@kubernetes --cluster=kubernetes --user=kube-admin --kubeconfig=/root/.kube/kubectl.kubeconfig

# kubectl config use-context kube-admin@kubernetes --kubeconfig=/root/.kube/kubectl.kubeconfig

# mv /root/.kube/{kubectl.kubeconfig,config}

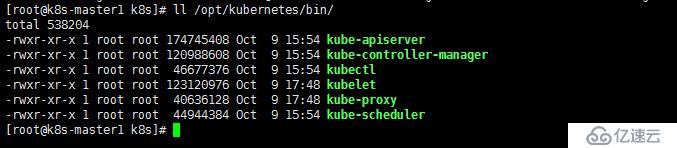

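⑥ Download the master binary package and place the binaries in /opt/kubernetes/bin

⑦ Create the configuration files and the systemd service files

kube-apiserver. Note: when deploying the other master, change the IP addresses.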

# vim /opt/kubernetes/cfg/kube-apiserver.conf

KUBE_APISERVER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--etcd-servers=https://172.30.2.10:2379,https://172.30.0.81:2379,https://172.30.0.89:2379 \

--bind-address=172.30.0.109 \

--secure-port=6443 \

--advertise-address=172.30.0.109 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth=true \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-32767 \

--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \

--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"

# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf

ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

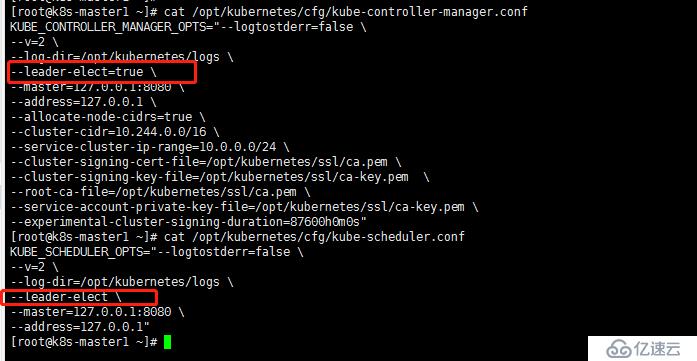

kube-controller-manager

# vim /opt/kubernetes/cfg/kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--leader-elect=true \

--master=127.0.0.1:8080 \

--address=127.0.0.1 \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--service-cluster-ip-range=10.0.0.0/24 \

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \

--root-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \

--experimental-cluster-signing-duration=87600h0m0s"

# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/opt/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

kube-scheduler

# vim /opt/kubernetes/cfg/kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--leader-elect \

--master=127.0.0.1:8080 \

--address=127.0.0.1"

# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf

ExecStart=/opt/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

Create the token file that node kubelets use to authenticate to the apiserver. The token value on the masters must match the one configured on the nodes.

# cat /opt/kubernetes/cfg/token.csv

c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"

Format: token,user,uid,group

You can also generate your own token and substitute it:

# head -c 16 /dev/urandom | od -An -t x | tr -d ' '
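
The two steps, generating a token and writing it in the token.csv format, can be combined. This is a minimal sketch; the function names are hypothetical, and the user, uid, and group are the ones from the token.csv line above. It uses `od -t x1` so the result is always one 32-character hex string.

```sh
#!/bin/sh
# Generate a 32-hex-character token from 16 random bytes.
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Print one token.csv line in the format: token,user,uid,"group"
# $1 = token value
token_csv_line() {
    printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$1"
}

# Example:
#   token_csv_line "$(new_bootstrap_token)" > /opt/kubernetes/cfg/token.csv
```

The same token value then has to be placed in bootstrap.kubeconfig on every node.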

Authorize kubelet-bootstrap so that node kubelets can reach the apiserver (this command needs a running kube-apiserver):

# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

Start the master components and watch the logs under /opt/kubernetes/logs:

# systemctl start kube-apiserver

# systemctl start kube-controller-manager

# systemctl start kube-scheduler

# systemctl enable kube-apiserver

# systemctl enable kube-controller-manager

# systemctl enable kube-scheduler

Deploy the second master node, 172.30.0.81, in exactly the same way; the only difference is the IP address in the apiserver configuration file.
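
A blanket search-and-replace on kube-apiserver.conf is risky here, because 172.30.0.81 also appears in --etcd-servers. The sketch below (GNU sed assumed; set_apiserver_ip is a hypothetical helper) rewrites only the --bind-address and --advertise-address flags.

```sh
#!/bin/sh
# Rewrite only --bind-address and --advertise-address in a kube-apiserver.conf,
# leaving --etcd-servers and every other flag untouched.
# $1 = config file, $2 = IP of the master this copy will run on.
set_apiserver_ip() {
    file="$1"; ip="$2"
    sed -i -E "s#^([[:space:]]*--(bind|advertise)-address=)[0-9.]+#\1${ip}#" "$file"
}

# Example, run on k8s-master2 after copying the file from k8s-master1:
#   set_apiserver_ip /opt/kubernetes/cfg/kube-apiserver.conf 172.30.0.81
```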

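4. Deploy the Kubernetes Cluster: Worker Nodes

① Prepare the node certificates

They were already copied to /opt/kubernetes/ssl on the node in the previous section.

② Create the kubelet and kube-proxy configuration files on the node

kubelet. Note: change the hostname setting (--hostname-override) on each node.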

# vim kubelet.conf

KUBELET_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--hostname-override=k8s-node1 \

--network-plugin=cni \

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet-config.yml \

--cert-dir=/opt/kubernetes/ssl \

--pod-infra-container-image=lizhenliang/pause-amd64:3.0"

bootstrap.kubeconfig is the file the kubelet uses to authenticate to the apiserver during bootstrap. The token inside it must match the token file on the master nodes.

# vim bootstrap.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: https://172.30.0.109:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: c47ffb939f5ca36231d9e3121a252940

# vim kubelet-config.yml

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110

# vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf

ExecStart=/opt/kubernetes/bin/kubelet $KUBELET_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

kube-proxy

# vim kube-proxy.conf

KUBE_PROXY_OPTS="--logtostderr=false \

--v=2 \

--log-dir=/opt/kubernetes/logs \

--config=/opt/kubernetes/cfg/kube-proxy-config.yml"

# vim kube-proxy-config.yml

kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
address: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: k8s-node1
clusterCIDR: 10.244.0.0/16
mode: ipvs
ipvs:
  scheduler: "rr"
iptables:
  masqueradeAll: true

kube-proxy.kubeconfig is the file kube-proxy uses to authenticate to the apiserver.

# vim kube-proxy.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /opt/kubernetes/ssl/ca.pem
    server: https://172.30.0.109:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-proxy
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-proxy
  user:
    client-certificate: /opt/kubernetes/ssl/kube-proxy.pem
    client-key: /opt/kubernetes/ssl/kube-proxy-key.pem

# vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf

ExecStart=/opt/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

The contents of /opt/kubernetes/cfg are shown below. kubelet.kubeconfig is generated automatically after the kubelet starts.

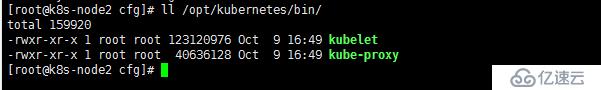

/opt/kubernetes/bin

③ Start the node services

# systemctl start kubelet

# systemctl start kube-proxy

# systemctl enable kubelet

# systemctl enable kube-proxy

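④ Approve the node's certificate signing request (run on a master; use the CSR name printed by the first command)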

# kubectl get csr

# kubectl certificate approve node-csr-MYUxbmf_nmPQjmH3LkbZRL2uTO-_FCzDQUoUfTy7YjI

# kubectl get node
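
With several nodes joining, copying each CSR name by hand gets tedious. The filter below is a sketch that assumes the tabular `kubectl get csr` output has a header row and ends each row with the CONDITION column; pending_csr_names is a hypothetical helper. Approving everything that is pending is only appropriate when every requesting node is trusted.

```sh
#!/bin/sh
# Read `kubectl get csr` table output on stdin and print the names of the
# requests whose last column (CONDITION) is exactly "Pending".
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example (requires a working kubectl; xargs -r is a GNU extension):
#   kubectl get csr | pending_csr_names | xargs -r -n1 kubectl certificate approve
```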

5. Deploy the CNI Network

Binary package download: https://github.com/containernetworking/plugins/releases

# mkdir -p /opt/cni/bin /etc/cni/net.d

# tar zxvf cni-plugins-linux-amd64-v0.8.2.tgz -C /opt/cni/bin

Make sure the kubelet has CNI enabled:

# cat /opt/kubernetes/cfg/kubelet.conf

--network-plugin=cni

Run on a master:

# kubectl apply -f kube-flannel.yaml

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

kube-flannel-ds-amd64-5xmhh 1/1 Running 6 171m

kube-flannel-ds-amd64-ps5fx 1/1 Running 0 150m

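6. Authorize the apiserver to Access the kubelet

For security, the kubelet rejects anonymous requests, so the apiserver must be granted access explicitly.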

# vim apiserver-to-kubelet-rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes

# kubectl apply -f apiserver-to-kubelet-rbac.yaml

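kubectl can now operate on pods and view their logs.

7. Deploy CoreDNS

# kubectl apply -f coredns.yaml

8. Kubernetes High-Availability Configuration

kube-controller-manager and kube-scheduler are already highly available through the --leader-elect setting in the configuration files above.

Their instances elect a leader and only the leader is active at any time, so only the apiserver needs extra work to become highly available.

kube-apiserver high availability

① First, set up both master nodes.

② Deploy nginx and keepalived on each of the two master nodes.

keepalived monitors the health of nginx.

nginx works as a layer-4 (stream) proxy that forwards to port 6443 on both masters.

The nginx configuration is as follows: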

# rpm -vih http://nginx.org/packages/rhel/7/x86_64/RPMS/nginx-1.16.0-1.el7.ngx.x86_64.rpm

# vim /etc/nginx/nginx.conf

……

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 172.30.0.109:6443;

server 172.30.0.81:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

……

Start nginx:

# systemctl start nginx

# systemctl enable nginx

Configure the keepalived VIP as 172.30.2.60.
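
The article does not show its keepalived configuration. The following is only an illustrative sketch that renders a typical keepalived.conf: the interface name, virtual_router_id, auth_pass, /24 prefix length, and check-script path are all assumptions to adapt, and render_keepalived_conf is a hypothetical helper. The check script is expected to exit non-zero when nginx is down so that the VIP moves to the other master.

```sh
#!/bin/sh
# Print a keepalived.conf that holds a VIP and tracks an nginx check script.
# $1 = MASTER or BACKUP, $2 = priority, $3 = VIP, $4 = network interface.
render_keepalived_conf() {
    state="$1"; priority="$2"; vip="$3"; iface="$4"
    cat <<EOF
# Assumed check script path; it must exit non-zero when nginx is not running.
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
    interval 2
}

vrrp_instance VI_1 {
    state $state
    interface $iface
    # Assumed values: both masters must use the same router id and password.
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $vip/24
    }
    track_script {
        check_nginx
    }
}
EOF
}

# Example: higher priority on k8s-master1, lower on k8s-master2.
#   render_keepalived_conf MASTER 100 172.30.2.60 eth0 > /etc/keepalived/keepalived.conf
#   render_keepalived_conf BACKUP 90  172.30.2.60 eth0 > /etc/keepalived/keepalived.conf
```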

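③ Configure the nodes to reach the master apiserver through the layer-4 proxy at 172.30.2.60

In the node configuration files, replace the single master address with the VIP 172.30.2.60.

Bulk change (run inside /opt/kubernetes/cfg on each node, then restart kubelet and kube-proxy):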

# sed -i 's#172.30.0.109#172.30.2.60#g' *
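
The one-liner above rewrites every occurrence of the address in every file in the current directory. A narrower variant, sketched below with a hypothetical helper name, touches only `server: https://<old>:6443` lines in the files it is given and escapes the dots so they match literally.

```sh
#!/bin/sh
# Point every "server: https://OLD:6443" line in the given files at NEW.
# $1 = old IP, $2 = new IP (the VIP), remaining arguments = files to edit.
switch_apiserver_endpoint() {
    old="$1"; new="$2"; shift 2
    # Escape dots so "172.30.0.109" does not match e.g. "172x30x0x109".
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    sed -i "s#\(server: https://\)${old_re}:6443#\1${new}:6443#" "$@"
}

# Example, run on a node:
#   cd /opt/kubernetes/cfg
#   switch_apiserver_endpoint 172.30.0.109 172.30.2.60 bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig
```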
