Base environment: CentOS 7.2

192.168.200.126 ceph2

192.168.200.127 ceph3

192.168.200.129 ceph4
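The three address/name pairs above need to be in /etc/hosts on every node. A minimal sketch of adding them idempotently; the function names `ceph_hosts_entries` and `add_missing_hosts` are hypothetical helpers, not part of Ceph:

```shell
# Print the /etc/hosts entries for the three lab nodes (copied from this article).
ceph_hosts_entries() {
    cat <<'EOF'
192.168.200.126 ceph2
192.168.200.127 ceph3
192.168.200.129 ceph4
EOF
}

# Append only the entries whose hostname is not yet present.
# $1 = path to the hosts file (normally /etc/hosts)
add_missing_hosts() {
    hosts_file=$1
    ceph_hosts_entries | while read -r ip name; do
        # -w matches whole words, so "ceph2" does not match "ceph20"
        grep -qw "$name" "$hosts_file" || printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    done
}
```

Running `add_missing_hosts /etc/hosts` twice leaves the file unchanged the second time.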

Disable the firewall and SELinux

# setenforce 0

# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# systemctl stop firewalld

# systemctl disable firewalld
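`setenforce 0` only lasts until the next reboot; the `sed` edit is what makes the change permanent. A small sketch of that edit as a reusable function, assuming the config path is passed in (the name `disable_selinux_in` is made up for this example):

```shell
# Rewrite SELINUX=enforcing to SELINUX=disabled and print the resulting line.
# $1 = path to the SELinux config file (normally /etc/selinux/config)
disable_selinux_in() {
    cfg=$1
    work=$(mktemp)
    # Anchor on the whole line so SELINUXTYPE= is left untouched.
    sed 's/^SELINUX=enforcing$/SELINUX=disabled/' "$cfg" > "$work" && cat "$work" > "$cfg"
    rm -f "$work"
    grep '^SELINUX=' "$cfg"
}
```

Calling `disable_selinux_in /etc/selinux/config` should print `SELINUX=disabled` once the edit has taken effect.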

Ceph Yum repository:

[root@ceph2 ~]# cat /etc/yum.repos.d/ceph.repo

[Ceph-mimic]

name=Ceph x86_64 packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/x86_64/

enabled=1

gpgcheck=0

ceph-deploy Yum repository:

[root@ceph2 ~]# cat /etc/yum.repos.d/ceph-deploy.repo

[ceph-deploy]

name=ceph-deploy

baseurl=https://download.ceph.com/rpm-mimic/el7/noarch/

enabled=1

gpgcheck=0
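The two repository files shown above can also be written from a script instead of by hand. A sketch, assuming the target directory is passed as an argument; `write_ceph_repos` is a hypothetical helper name:

```shell
# Write ceph.repo and ceph-deploy.repo with the contents shown in this article.
# $1 = target directory (normally /etc/yum.repos.d)
write_ceph_repos() {
    repo_dir=$1
    cat > "$repo_dir/ceph.repo" <<'EOF'
[Ceph-mimic]
name=Ceph x86_64 packages
baseurl=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/x86_64/
enabled=1
gpgcheck=0
EOF
    cat > "$repo_dir/ceph-deploy.repo" <<'EOF'
[ceph-deploy]
name=ceph-deploy
baseurl=https://download.ceph.com/rpm-mimic/el7/noarch/
enabled=1
gpgcheck=0
EOF
}
```

`write_ceph_repos /etc/yum.repos.d` followed by `yum makecache` is one way to confirm that both repositories resolve.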

Install ntp on all nodes, then synchronize the time

# yum install -y ntp

# ntpdate pool.ntp.org

Configure passwordless SSH across the cluster

[root@ceph2 ~]# ssh-keygen

[root@ceph2 ~]# ssh-copy-id ceph2

[root@ceph2 ~]# ssh-copy-id ceph3

[root@ceph2 ~]# ssh-copy-id ceph4

Sync the configuration to the other nodes

[root@ceph2 ~]# scp /etc/hosts ceph3:/etc/hosts

[root@ceph2 ~]# scp /etc/hosts ceph4:/etc/hosts

[root@ceph2 ~]# scp /etc/yum.repos.d/ceph-deploy.repo ceph3:/etc/yum.repos.d/

[root@ceph2 ~]# scp /etc/yum.repos.d/ceph-deploy.repo ceph4:/etc/yum.repos.d/
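The copy steps above can be collapsed into a loop. The sketch below is an assumption-laden variant: it also pushes ceph.repo, which the commands above do not copy but which the other nodes presumably need before they can `yum install ceph`. `push_files`, `NODES`, `FILES`, and `DRY_RUN` are names invented for this example.

```shell
# Copy each file to the same path on every other node.
# Set DRY_RUN=1 to print the scp commands instead of running them.
NODES="ceph3 ceph4"
FILES="/etc/hosts /etc/yum.repos.d/ceph.repo /etc/yum.repos.d/ceph-deploy.repo"

push_files() {
    for node in $NODES; do
        for f in $FILES; do
            if [ "${DRY_RUN:-0}" = 1 ]; then
                echo "scp $f $node:$f"
            else
                scp "$f" "$node:$f"
            fi
        done
    done
}
```

`DRY_RUN=1 push_files` lists the six copies without touching the network, which is a cheap check before running it for real.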

Deploy Ceph

[root@ceph2 ~]# mkdir /etc/ceph

[root@ceph2 ~]# yum install -y ceph-deploy python-pip

[root@ceph2 ~]# cd /etc/ceph

[root@ceph2 ceph]# ceph-deploy new ceph2 ceph3 ceph4

[root@ceph2 ceph]# ls

ceph.conf  ceph-deploy-ceph.log  ceph.mon.keyring

[root@ceph2 ceph]# vi ceph.conf

[global]

fsid = d5dec480-a9df-4833-b740-de3a0ae4c755

mon_initial_members = ceph2, ceph3, ceph4

mon_host = 192.168.200.126,192.168.200.127,192.168.200.129

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public network = 192.168.200.0/24

cluster network = 192.168.200.0/24
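Every address in `mon_host` has to fall inside `public network`, otherwise the monitors will not bind where clients expect them. A rough sketch of that check in plain shell arithmetic; `ip_to_int` and `ip_in_cidr` are hypothetical helpers, and only IPv4 is handled:

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies inside network $2 (written as a.b.c.d/bits).
ip_in_cidr() {
    addr=$1
    net=${2%/*}
    bits=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$addr") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Check the three monitor addresses from ceph.conf against the public network.
for mon in 192.168.200.126 192.168.200.127 192.168.200.129; do
    ip_in_cidr "$mon" 192.168.200.0/24 || echo "$mon is outside the public network"
done
```

With the values used in this article the loop prints nothing, since all three monitors are in 192.168.200.0/24.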

Install the Ceph packages on all nodes:

yum install -y ceph

On node ceph2, initialize the monitors and gather the keys

[root@ceph2 ceph]# ceph-deploy mon create-initial

Distribute the keys to all nodes

[root@ceph2 ceph]# ceph-deploy admin ceph{2..4}

Configure the OSDs

[root@ceph2 ceph]# ceph-deploy osd create --data /dev/sdb ceph2

[root@ceph2 ceph]# ceph-deploy osd create --data /dev/sdb ceph3

[root@ceph2 ceph]# ceph-deploy osd create --data /dev/sdb ceph4
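Since every node contributes the same device, the three commands above differ only in the hostname. A sketch of the same step as a loop, assuming /dev/sdb is the data disk on each node as in this article; `create_osds`, `OSD_NODES`, `OSD_DEVICE`, and `DRY_RUN` are illustrative names:

```shell
# Run (or, with DRY_RUN=1, just print) one "ceph-deploy osd create" per node.
OSD_NODES="ceph2 ceph3 ceph4"
OSD_DEVICE="/dev/sdb"

create_osds() {
    for node in $OSD_NODES; do
        cmd="ceph-deploy osd create --data $OSD_DEVICE $node"
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "$cmd"
        else
            # Unquoted on purpose: the string is split into command and arguments.
            $cmd
        fi
    done
}
```

As with the other helpers, `DRY_RUN=1 create_osds` shows what would run; the real invocation has to happen from the directory holding ceph.conf and the keyrings.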

[root@ceph2 ceph]# ceph -s

  cluster:

    id:     d5dec480-a9df-4833-b740-de3a0ae4c755

    health: HEALTH_WARN

            no active mgr

  services:

    mon: 3 daemons, quorum ceph2,ceph3,ceph4

    mgr: no daemons active

    osd: 3 osds: 3 up, 3 in

  data:

    pools:   0 pools, 0 pgs

    objects: 0  objects, 0 B

    usage:   0 B used, 0 B / 0 B avail

    pgs:

If the following warning appears, the clocks on the cluster hosts are out of sync; synchronizing the time resolves it:

    health: HEALTH_WARN

            clock skew detected on mon.ceph3

[root@ceph3 ~]# ntpdate pool.ntp.org

[root@ceph2 ceph]# systemctl restart ceph-mon.target
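To find out which hosts need the `ntpdate` run, the monitor names can be pulled out of the warning text. A small sketch that reads status output on stdin; `skewed_mons` is a hypothetical helper, and the message format is assumed to match the warning shown above:

```shell
# Print one monitor name per line for every mon listed in a clock-skew warning.
skewed_mons() {
    grep 'clock skew detected on' | grep -o 'mon\.[A-Za-z0-9_-]*'
}
```

For example, `ceph -s | skewed_mons` would print `mon.ceph3` for the warning above; each reported host is then a candidate for `ntpdate pool.ntp.org`.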

[root@ceph2 ceph]# ceph -s

  cluster:

    id:     d5dec480-a9df-4833-b740-de3a0ae4c755

    health: HEALTH_OK

  services:

    mon: 3 daemons, quorum ceph2,ceph3,ceph4

    mgr: ceph2(active), standbys: ceph4, ceph3

    osd: 3 osds: 3 up, 3 in

  data:

    pools:   0 pools, 0 pgs

    objects: 0  objects, 0 B

    usage:   3.0 GiB used, 57 GiB / 60 GiB avail

    pgs:
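When scripting the deployment it helps to wait for `HEALTH_OK` instead of reading the status by eye. A minimal sketch that extracts the health field from `ceph -s` text and polls it; `cluster_health` and `wait_for_health_ok` are made-up names, and the 5-second interval is an arbitrary choice:

```shell
# Read "ceph -s" output on stdin and print the value of the health: field.
cluster_health() {
    awk '$1 == "health:" { print $2; exit }'
}

# Poll the cluster until it reports HEALTH_OK.
# $1 = number of attempts (default 10). Requires the ceph CLI and an admin keyring.
wait_for_health_ok() {
    tries=${1:-10}
    while [ "$tries" -gt 0 ]; do
        [ "$(ceph -s | cluster_health)" = "HEALTH_OK" ] && return 0
        tries=$(( tries - 1 ))
        sleep 5
    done
    return 1
}
```

`wait_for_health_ok 24 || echo "cluster still not healthy"` would give the cluster roughly two minutes to settle.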

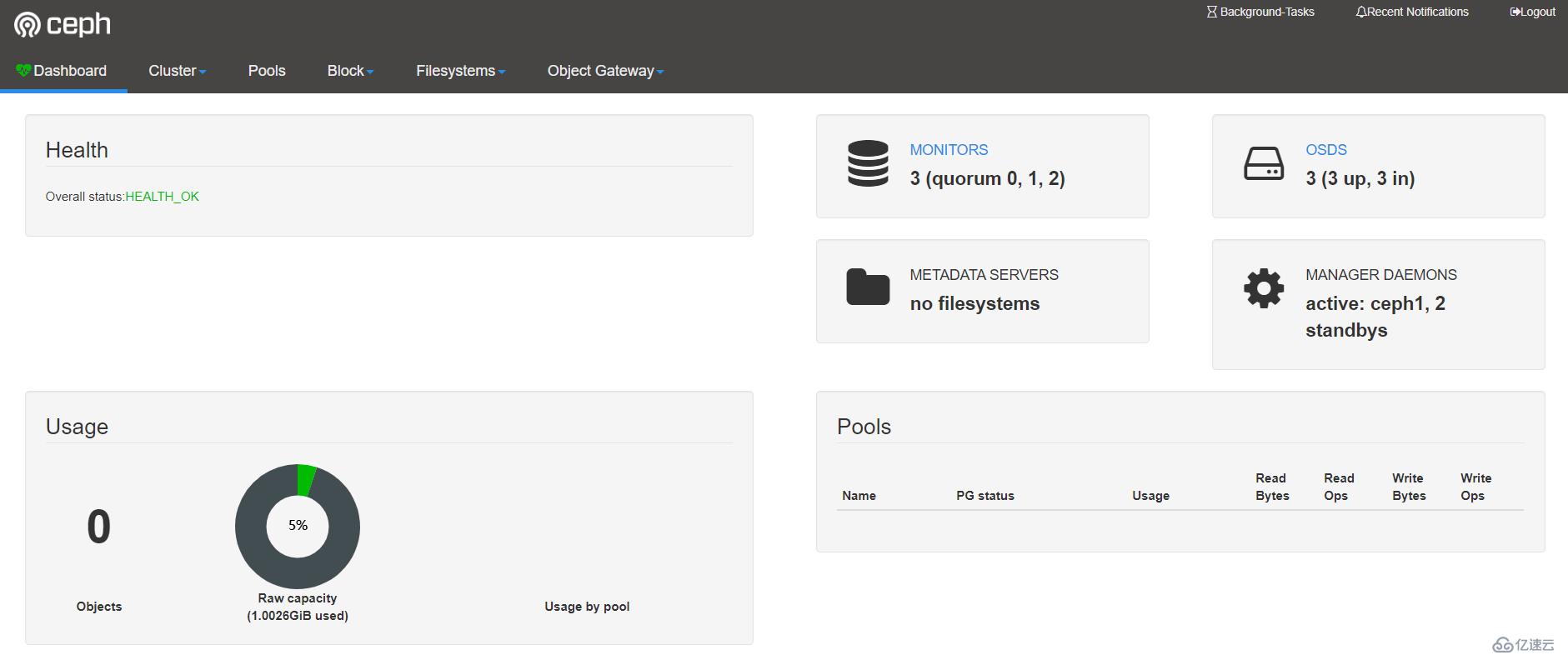

Enable the dashboard for web management

[root@ceph2 ceph]# vi /etc/ceph/ceph.conf

# Add the following

[mgr]

mgr_modules = dashboard

[root@ceph2 ceph]# ceph mgr module enable dashboard

[root@ceph2 ceph]# ceph-deploy mgr create ceph2

Generate and install a self-signed certificate

[root@ceph2 ceph]# ceph dashboard create-self-signed-cert

Generate a key pair; this produces two files, dashboard.crt and dashboard.key

[root@ceph2 ceph]# openssl req -new -nodes -x509 -subj "/O=IT/CN=ceph-mgr-dashboard" -days 3650 -keyout dashboard.key -out dashboard.crt -extensions v3_ca

Configure the service address and port. The default port is 8443; here it is changed to 7000

[root@ceph2 ceph]# ceph config set mgr mgr/dashboard/server_addr 192.168.200.126

[root@ceph2 ceph]# ceph config set mgr mgr/dashboard/server_port 7000

[root@ceph2 ceph]# ceph dashboard set-login-credentials admin admin

[root@ceph2 ceph]# systemctl restart ceph-mgr@ceph2.service

[root@ceph2 ceph]# ceph mgr services

{

    "dashboard": "https://192.168.200.126:7000/"

}
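The JSON printed by `ceph mgr services` is simple enough to pick apart without a JSON tool. A sketch that extracts the dashboard URL from that output on stdin; `dashboard_url` is a hypothetical helper, and it assumes the key and value sit on one line as shown above:

```shell
# Read "ceph mgr services" JSON on stdin and print the dashboard URL.
dashboard_url() {
    sed -n 's/.*"dashboard"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

Something like `curl -k "$(ceph mgr services | dashboard_url)"` could then confirm the port change took effect; `-k` is needed because the certificate is self-signed.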

Sync the Ceph configuration file across the cluster

[root@ceph2 ceph]# ceph-deploy --overwrite-conf config push ceph3

[root@ceph2 ceph]# ceph-deploy --overwrite-conf config push ceph4

https://192.168.200.126:7000/#/login

Using block storage

[root@ceph4 ceph]# ceph osd pool create rbd 128

[root@ceph4 ceph]# ceph osd pool get rbd pg_num

pg_num: 128

[root@ceph4 ceph]# ceph auth add client.rbd mon 'allow r' osd 'allow rwx pool=rbd'

[root@ceph4 ceph]# ceph auth export client.rbd -o ceph.client.rbd.keyring

[root@ceph4 ceph]# rbd create rbd1 --size 1024 --name client.rbd

[root@ceph4 ceph]# rbd ls -p rbd --name client.rbd

rbd1

[root@ceph4 ceph]# rbd --image rbd1 info --name client.rbd

rbd image 'rbd1':

        size 1 GiB in 256 objects

        order 22 (4 MiB objects)

        id: 85d36b8b4567

        block_name_prefix: rbd_data.85d36b8b4567

        format: 2

        features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

        op_features:

        flags:

        create_timestamp: Sun Nov 17 04:33:17 2019
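The `size 1 GiB in 256 objects` and `order 22 (4 MiB objects)` lines are related: the order is the base-2 logarithm of the object size, so the object count is the image size divided by 2^order. A quick sketch of that arithmetic; `rbd_object_count` is a name made up here:

```shell
# Number of RADOS objects backing an RBD image.
# $1 = image size in MiB, $2 = order (object size is 2^order bytes)
rbd_object_count() {
    size_bytes=$(( $1 * 1024 * 1024 ))
    obj_bytes=$(( 1 << $2 ))
    # Round up so a partial trailing object is still counted.
    echo $(( (size_bytes + obj_bytes - 1) / obj_bytes ))
}

rbd_object_count 1024 22
```

For the `--size 1024` image created above this prints 256, matching the `rbd info` output.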


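Placement groups (PGs) are the buckets that a pool's objects are mapped into; pg_num sets how many of them the pool has. Each disk backs one OSD, and this cluster has three (one sdb per node). With fewer than 5 OSDs, a pg_num of 128 is the usual choice, which is why the pool was created with 128.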
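The 128 figure is also what the common rule of thumb from the Ceph placement-group guidance gives: take (OSDs × 100) / replica count and round up to the next power of two. A sketch of that calculation, assuming a replica count of 3 (the Ceph default pool size, which this article does not state explicitly); `suggested_pg_num` is a hypothetical helper:

```shell
# Rule-of-thumb pg_num: (OSDs * 100) / replicas, rounded up to a power of two.
# $1 = number of OSDs, $2 = pool replica count (assumed 3 here)
suggested_pg_num() {
    target=$(( $1 * 100 / $2 ))
    pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

suggested_pg_num 3 3
```

For 3 OSDs and 3 replicas the target is 100, which rounds up to 128. Treat the result as a starting point only; it applies to the cluster as a whole and has to be shared out when there are several pools.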

[root@ceph4 ceph]# rbd feature disable rbd1 exclusive-lock object-map deep-flatten fast-diff --name client.rbd

[root@ceph4 ceph]# rbd map --image rbd1 --name client.rbd

/dev/rbd0

[root@ceph4 ceph]# rbd showmapped --name client.rbd

id pool image snap device

0  rbd  rbd1  -    /dev/rbd0

[root@ceph4 ceph]# mkfs.xfs /dev/rbd0

meta-data=/dev/rbd0              isize=256    agcount=8, agsize=32752 blks

         =                       sectsz=512   attr=2, projid32bit=1

         =                       crc=0        finobt=0

data     =                       bsize=4096   blocks=262016, imaxpct=25

         =                       sunit=16     swidth=16 blks

naming   =version 2              bsize=4096   ascii-ci=0 ftype=0

log      =internal log           bsize=4096   blocks=768, version=2

         =                       sectsz=512   sunit=16 blks, lazy-count=1

realtime =none                   extsz=4096   blocks=0, rtextents=0

[root@ceph4 ceph]# mount /dev/rbd0 /mnt/

[root@ceph4 ceph]# df -h

Filesystem               Size  Used Avail Use% Mounted on

devtmpfs                 467M     0  467M   0% /dev

tmpfs                    479M     0  479M   0% /dev/shm

tmpfs                    479M   13M  466M   3% /run

tmpfs                    479M     0  479M   0% /sys/fs/cgroup

/dev/mapper/centos-root   50G  1.9G   49G   4% /

/dev/mapper/centos-home   28G   33M   28G   1% /home

/dev/sda1                497M  139M  359M  28% /boot

tmpfs                    479M   24K  479M   1% /var/lib/ceph/osd/ceph-2

tmpfs                     96M     0   96M   0% /run/user/0

/dev/rbd0               1021M   33M  989M   4% /mnt

Delete the storage pool

[root@ceph4 ceph]# umount /dev/rbd0

[root@ceph4 ceph]# rbd unmap /dev/rbd/rbd/rbd1

[root@ceph4 ceph]# ceph osd pool delete rbd rbd --yes-i-really-really-mean-it

pool 'rbd' removed
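Because pool deletion is irreversible, it can be worth printing the teardown sequence for review before running it. A sketch that only emits the commands used above, in order; `teardown_cmds` is a hypothetical helper. Note that recent Ceph releases, Mimic included, normally refuse pool deletion unless `mon_allow_pool_delete` is enabled on the monitors, so that setting is assumed to be in place here.

```shell
# Print the unmount, unmap, and pool-delete commands without running them.
# $1 = mounted device, $2 = pool name, $3 = image name
teardown_cmds() {
    echo "umount $1"
    echo "rbd unmap /dev/rbd/$2/$3"
    # The pool name is given twice on purpose; ceph requires the repetition.
    echo "ceph osd pool delete $2 $2 --yes-i-really-really-mean-it"
}

teardown_cmds /dev/rbd0 rbd rbd1
```

After checking the output, the same call can be piped to `sh -e` so that the sequence stops at the first failing step.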
